An astounding 8.4 billion voice assistants are in use today. That’s more than one device per person on Earth. This shows that conversational AI is becoming an essential part of daily life.

Businesses are using voice agents in customer support, sales, and smart devices. But scaling these systems remains a challenge.

Every company that tries to scale may face problems like laggy replies when loads increase, shaky performance in multilingual contexts, and growing infrastructure costs.

In this article, you’ll see how Murf Falcon solves these challenges and sets itself up as a powerful foundation for global, enterprise-grade conversational AI.

Why Scaling Conversational AI Is Harder Than It Looks

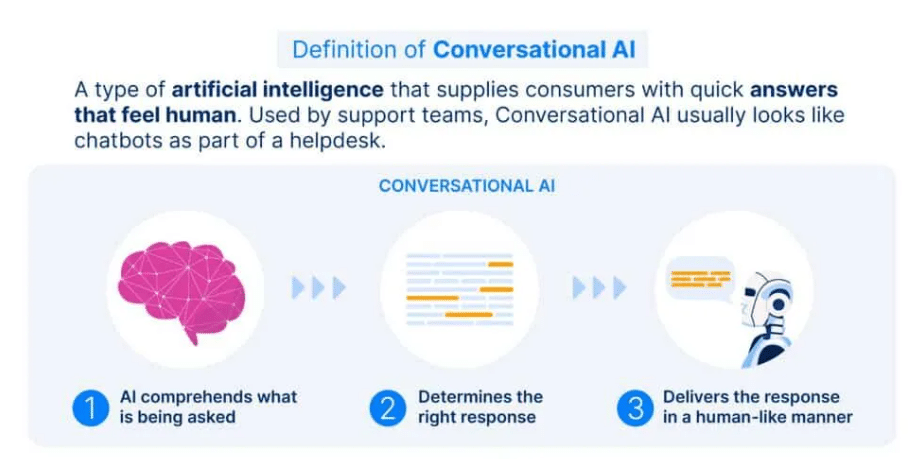

What comes to your mind when you hear the word ‘voice AI’? Well, a simple system where you ask or speak something, AI listens to it, and then replies accordingly. Correct?

But scaling that to thousands or tens of thousands of concurrent users in real-time and across geographies is challenging.

The Latency Dilemma: Every Millisecond Matters

Latency means delays. And delay risks your reputation. Consider you call a support line, and there’s a few seconds’ pause before the voice agent responds. That delay feels robotic. It kills the rhythm of a conversation. Users might hang up or become frustrated. Slow responses can lead to misunderstanding or even transaction failures. You can reduce friction at the very start of a conversation by using a vCard QR code generator, allowing users to reach the right support channel instantly without delays or manual entry.

Most traditional voice APIs can handle a few calls gracefully. But as soon as load increases, latency grows, which breaks the flow of a conversation, and the experience breaks down. For a global voice-first product, these delays are unacceptable. The stakes are high as poor latencies = poor customer experience = frustrated users. For real world teams, pairing scalable AI infrastructure with a stable business line provider like Quo helps ensure callers always reach support without added friction.

Concurrency at Scale: The 10,000-Call Problem

It’s one thing to run a demo with ten or a hundred users. It’s another to support 10,000 simultaneous conversations without dropping packets, skipping beats, or choking the system.

Many AI voice platforms simply weren’t built to scale with real-world demands. When the load increases, they either queue requests, which leads to long wait times, or degrade audio quality. When you’re operating a contact center or deploying voice agents for a global event, this is a hard limit.

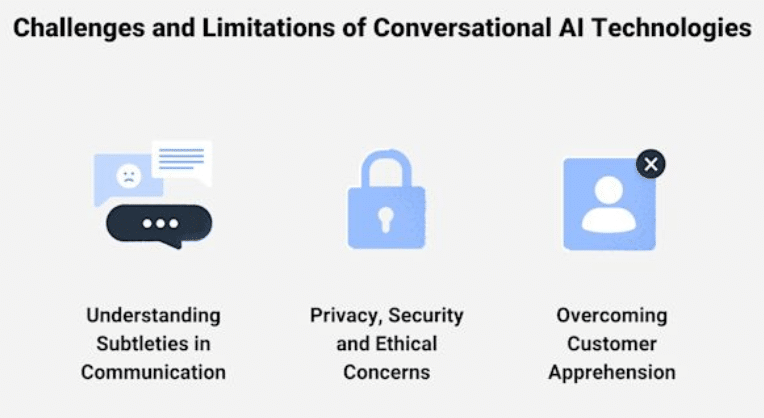

The Multilingual Challenge: Beyond Simple Translation

Scaling internationally isn’t just a matter of translating text. People code-switch. They mix languages mid-sentence. They use regional dialects, colloquialisms, slang, and idioms and not all LLM frameworks can deal with it. The true definition of a truly global voice agent is that you need more than surface-level translation; you need native-level fluency.

Similarly, understanding accents, managing pronunciation, and preserving the speaker’s voice quality across languages is not trivial. Many TTS (text-to-speech) systems collapse when they encounter challenges, such as code-mixing or multilingual conversational flows. The result is awkward speech, unnatural inflection, and broken user trust.

Murf Falcon: Built for the Enterprise-Scale AI Era

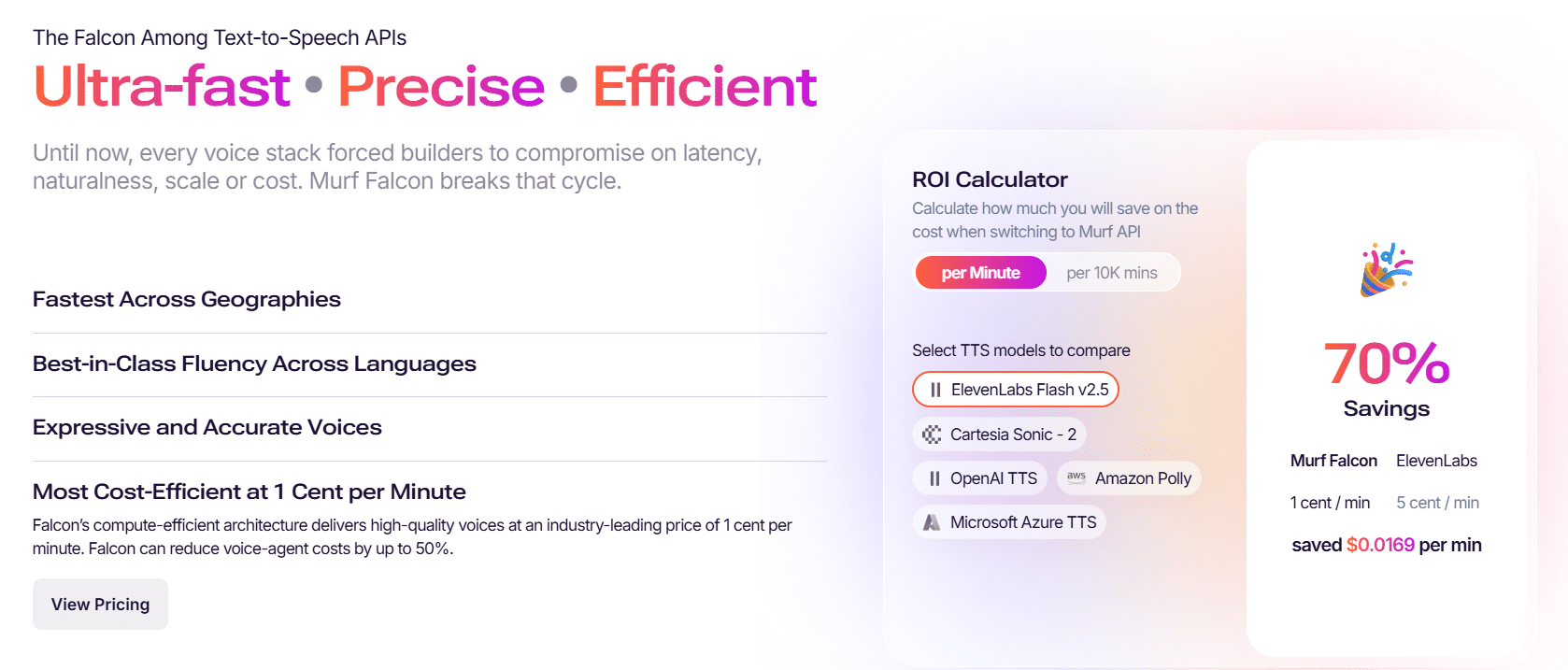

Murf Falcon changes the game. It is a voice API that is built for real-time and scalable conversational AI. It helps with:

Sub-150ms Latency: Redefining Real-Time Communication

Falcon’s architecture is optimized to deliver sub-150 millisecond time-to-first-audio, and its internal model latency clocks in around 80ms. It holds up in real-world conditions when your users are on different continents.

Consider voice agents that respond in real time, regardless of whether a user is calling from Berlin or São Paulo. That fluidity preserves conversational rhythm, making interactions feel genuinely human.

10,000 Concurrent Calls, Proven Scale Without Compromise

Falcon can handle up to 10,000 concurrent voice streams without degrading performance. Thanks to its scalable backend and intelligent load management. It maintains high audio fidelity and consistent latency even during peak times.

For enterprises, this means deploying Falcon-powered voice agents in contact centers, large-scale virtual event platforms, or global services without fear of bottlenecks or system failure.

Data Residency in 10+ Countries, Comply While You Scale

These days, data sovereignty matters. Many regulated industries, like banking, healthcare, or government, cannot risk sending voice data to any foreign cloud. Falcon supports data residency in over 10 countries, thereby enabling global reach while respecting local regulations.

This means your voice data can stay within regionally compliant infrastructure. It reduces delays due to network hops and increases trust by enterprise clients who care about privacy and compliance.

On-Prem Deployment for Maximum Control

Falcon supports hybrid and on-prem deployments. Organizations that need full control over infrastructure or prefer to host in a private data center get that flexibility with Falcon. In addition, utilizing Murf Falcon can significantly reduce involuntary churn by ensuring a seamless and reliable voice AI experience, which is crucial for maintaining customer satisfaction and retention.

On-prem deployment offers enhanced security, lower latency, and compliance with strict data governance policies. For mission-critical systems like financial services or smart city deployments, this is a big deal. Moreover, integrating cross-platform app development services allows organizations to streamline their application delivery, ensuring that user interfaces remain consistent across various devices while adhering to stringent security and compliance requirements.

Key Use Cases: Real-World Applications of Falcon

So, how are companies putting Falcon to work? Here are some compelling real-world scenarios:

Customer Support Automation

Let us suppose a global retailer using Falcon to deploy virtual agents across its customer support system. During peak hours, thousands of customers call in simultaneously. But the AI-powered voice agents powered by Falcon handle every call smoothly, quickly, and naturally.

Response times are minimized, wait queues are shortened, and support agents are freed for more complex tasks. The business saves on overhead, and customers get fast, human-like assistance at any time of the day.

Voice Commerce & Interactive Sales

In voice commerce, every second counts. A shopper speaks to an AI assistant to browse, ask for help, or complete a purchase.

Slow replies or awkward speeches kill conversion. Falcon lets you build interactive voice sales assistants that provide instant and natural responses, so businesses can guide customers seamlessly through upsells, cross-sells, or checkouts.

IoT Voice Interfaces

Consider a smart home or a connected device in a multilingual household. Falcon can power devices from home assistants and appliances to wearables and in-car systems with responsive, context-aware voice interactions.

Even if a user switches from English to Spanish mid-command, Falcon’s multilingual model handles it smoothly. And since the architecture is built for low latency and on-prem options, devices stay snappy and secure.

Performance at Scale: Numbers That Matter

To put some numbers behind the promise:

- 10,000 concurrent calls fully supported without latency bottlenecks

- Sub-150ms average latency where real-time response feels genuinely natural

- 80ms model inference optimized for speed without sacrificing quality

- 35+ languages with native fluency and in-sentence code-switching

- Data centers in 10+ countries with data residency support

- Enterprise-grade reliability with high uptime and scalable architecture

These numbers reflect Falcon’s advanced design and capability. It’s built specifically for production use cases where scale and reliability matter.

Why Murf Falcon Is the Backbone of Scalable Voice AI

Here’s what really makes Falcon stand out:

- No Compromise: You don’t have to trade off latency to scale, or pay a premium for multilingual support.

- Global Reach, Local Compliance: Thanks to data residency and on-prem deployment, Falcon respects data sovereignty without sacrificing performance.

- Cost Efficiency: With optimizations built into its architecture, Falcon delivers enterprise-scale voice AI at a fraction of the cost.

- Developer-Friendly: Easy to integrate, flexible to deploy, Falcon works whether you’re building a contact center, a voice commerce assistant, or an IoT voice interface.

- Built for Production: This is not a demo-layer API; it’s intended for real-world applications where uptime, latency, and concurrency matter.

The Future of Conversational AI Is Built on Falcon

We’re living in a world where voice is becoming the most natural interface for how humans and machines connect. But scaling conversational AI globally, fast, and in real time is hard. Latency, concurrency, and language barriers hold many businesses back.

But now, no more. Murf Falcon solves these problems.

It gives you ultra-low latency, truly global scale, native multilingual support, and enterprise-level control. That’s the infrastructure you need if you want your voice agents to feel human, responsive, and reliable, not clunky or constrained.

If you’re an enterprise architect, a product leader, or a developer building voice-first systems, it’s time to build on Falcon. Don’t settle for voice APIs that force trade-offs. Choose the backbone that’s made for growth, performance, and real human interaction.

Get started with Murf Falcon today and power the next generation of conversational AI.