In AI video generation, the real breakthrough is not simply generating motion from text. It is understanding and integrating multiple forms of reference into one coherent, controllable output. This is where Seedance 2 stands apart.

Seedance 2 introduces a fully integrated multimodal reference system that allows creators to upload text, images, video clips, and audio files — not just as input material, but as active reference objects. Every uploaded asset can serve as either a subject of transformation or a reference source for motion, composition, style, sound, or narrative tone.

This shifts AI video generation from “prompt-based guessing” to true directed creation.

Multimodal Reference: Refer to Anything, Control Everything

With Seedance 2, users can:

- Upload images to define characters, composition, or visual style

- Upload videos to replicate motion patterns, camera language, or special effects

- Upload audio to guide rhythm, pacing, and emotional tone

- Use natural language prompts to describe narrative logic and scene transitions

What makes Seedance 2 particularly powerful is that references are not limited to surface appearance. The system can interpret:

- Motion dynamics

- Cinematic camera movement

- Visual effects timing

- Environmental interaction

- Emotional expression

- Narrative continuity

- Sound atmosphere

As long as the prompt clearly defines what should be referenced and how it should be used, Seedance 2 can distinguish between transformation targets and inspiration sources.
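
One way to keep "what is referenced" and "how it is used" explicit is to write the instruction sentences from a small manifest before pasting them into the prompt. The sketch below is only an illustration of that habit, not a Seedance 2 API: the `Reference` class, the `subject`/`source` role names, and the `@Video1` tag are all hypothetical (only the `@Image1` tag style appears in this article).

```python
from dataclasses import dataclass, field


@dataclass
class Reference:
    """One uploaded asset and how the prompt should use it (hypothetical structure)."""
    tag: str    # e.g. "@Image1"; tag style borrowed from the article's example prompt
    role: str   # "subject" = transformation target, "source" = inspiration only
    aspects: list[str] = field(default_factory=list)  # what to borrow from a source


def describe(ref: Reference) -> str:
    """Turn one reference into an explicit instruction sentence."""
    if ref.role == "subject":
        return f"Use {ref.tag} as the subject."
    if ref.role == "source":
        if not ref.aspects:
            raise ValueError(f"{ref.tag}: a source reference needs at least one aspect")
        return f"Take the {', '.join(ref.aspects)} from {ref.tag}."
    raise ValueError(f"{ref.tag}: unknown role {ref.role!r}")


def build_prompt(scene: str, refs: list[Reference]) -> str:
    """Append one instruction sentence per reference to the scene description."""
    return " ".join([scene.strip()] + [describe(r) for r in refs])


refs = [
    Reference("@Image1", "subject"),
    Reference("@Video1", "source", ["camera movement", "pacing"]),  # assumed tag
]
print(build_prompt("A man walks down a corridor.", refs))
```

Writing the roles down this way makes it harder to upload an asset without saying whether it is the thing to transform or merely a style or motion source.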

In practical terms:

Seedance 2 = Multimodal Reference Capability (reference anything) + Strong Creative Generation + Precise Instruction Understanding.

This means users can combine multiple reference materials into a single, coherent cinematic output without losing structural stability.

Case Study: Emotional Narrative with Multimodal Reference

To demonstrate the multimodal capability of Seedance 2, consider the following scenario.

Prompt:

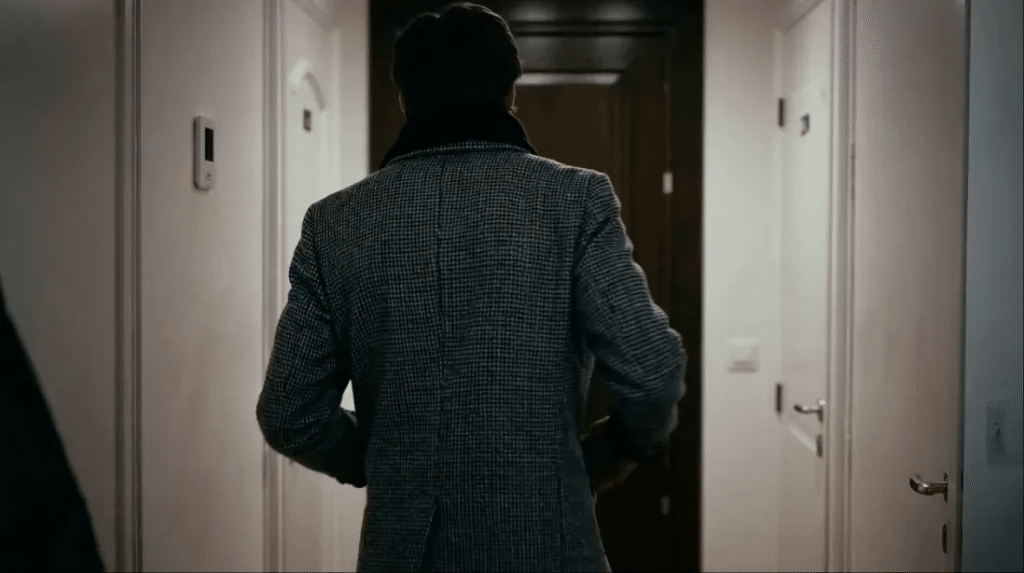

“Man @Image1 walks tiredly down a corridor after work. His footsteps slow down, and he finally stops in front of his home door. Close-up shot of his face. The man takes a deep breath, adjusts his emotions, releases negative feelings, and becomes calm. Close-up of him searching for his keys, inserting them into the lock. After entering the house, his little daughter and a pet dog run toward him happily for a warm embrace. The indoor atmosphere is cozy. Natural dialogue throughout.”

Visual and Motion Interpretation

In this example, @Image1 defines the identity and appearance of the male character. Seedance 2 uses this image reference to maintain facial consistency throughout the entire sequence.
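
Because the prompt ties the character to an upload purely through the `@Image1` tag, it can help to check that every tag in a prompt has a matching asset before submitting. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, it is not part of Seedance 2, and the `@Video`/`@Audio` tag forms are assumed by analogy with the `@Image1` tag shown above.

```python
import re

# @Image1 follows the article's example; @VideoN and @AudioN are assumed by analogy.
TAG_PATTERN = re.compile(r"@(?:Image|Video|Audio)\d+")


def find_tags(prompt: str) -> list[str]:
    """Return the tags used in the prompt, in order of first appearance."""
    seen: list[str] = []
    for match in TAG_PATTERN.finditer(prompt):
        if match.group(0) not in seen:
            seen.append(match.group(0))
    return seen


def check_references(prompt: str, uploads: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (tags with no uploaded file, uploaded files the prompt never mentions)."""
    tags = find_tags(prompt)
    missing = [t for t in tags if t not in uploads]
    unused = [t for t in uploads if t not in tags]
    return missing, unused


prompt = "Man @Image1 walks tiredly down a corridor after work."
uploads = {"@Image1": "image-1.png"}  # tag -> local file, hypothetical mapping
print(check_references(prompt, uploads))  # ([], [])
```

An empty pair means every tag resolves to a file and no upload is left unexplained in the prompt.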

The system interprets the emotional arc described in the prompt:

- Physical fatigue expressed through slowed footsteps and posture.

- Emotional shift during the close-up breathing moment.

- Fine-motor action while searching for keys.

- Warm tonal transition upon entering the house.

- Dynamic interaction between child, dog, and father.

Rather than treating the scene as disconnected actions, Seedance 2 preserves narrative flow. Camera language shifts from corridor tracking to close-up framing, then to interior wide shots, all guided by the prompt’s cinematic cues.

Audio and Emotional Synchronization

Because Seedance 2 supports audio references and natural dialogue cues, the system can incorporate environmental sound layers such as:

- Corridor footsteps

- Subtle breathing sounds

- Keys clinking against the lock

- Warm indoor ambience

- Child laughter and dog movement

The multimodal reference system allows both visual and auditory layers to align with the emotional transformation described in the text.

The result is not just a sequence of actions, but a cohesive short narrative film.

Why Multimodal Reference Matters

Traditional AI video tools rely on text prompts alone. This often leads to instability, identity drift, or inconsistent motion logic.

Seedance 2 overcomes this by grounding generation in multimodal references. When you upload an image, the character remains consistent. When you upload a video, the motion logic becomes structured. When you upload audio, the rhythm becomes intentional.

Instead of approximating intent, Seedance 2 interprets it.

This ability to reference “anything” — action, effect, form, camera movement, character, scene, or sound — transforms AI generation into a controllable directing process.

Conclusion

Seedance 2’s multimodal reference capability represents a major advancement in AI-driven filmmaking. By combining reference flexibility, creative generation strength, and precise instruction understanding, Seedance 2 enables creators to produce emotionally layered, structurally coherent, and visually stable video narratives.

For storytellers, marketers, and creators who demand control rather than randomness, Seedance 2 offers a powerful new paradigm: reference anything, generate with precision.