When talking about ‘internet bots’, our first thoughts often go to the malicious bots used by hackers and cybercriminals to perform various destructive tasks from brute force attacks to malware injection to full-scale data breach attacks.

However, it’s important to note that there are also good bots, owned by reputable companies, that perform beneficial tasks for the website and/or its users. Googlebot, for example, is responsible for crawling and indexing websites on the internet so Google can display and rank them on its search result pages.

Thus, the ideal approach in dealing with these bots is to block all malicious bots while allowing the good bots and legitimate human users to visit our site, but in practice, this can be easier said than done.

With close to 50% of all internet traffic coming from bot activities, managing these bots is becoming increasingly important. Here we will discuss how to tell the difference between good bots and bad bots, and how to manage malicious bots on your network.

Good Bots VS Bad Bots: The Owners/Operators

A key consideration when differentiating between good bots and bad bots is that good bots are owned by reputable companies. They don’t have to be giant companies like Google, Amazon, or Facebook, but at the very least you should be able to find information about the owner of these bots easily, and the company should have a relatively good reputation.

Good bots will never hide their owners/operators from you, and this can be the clearest distinction when attempting to differentiate good bots from bad bots.

However, it’s worth noting that some malicious bots impersonate good bots, so they might appear to be owned by these reputable companies when in fact they are not.
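One common way to check a bot’s claimed identity is a reverse DNS lookup on the requesting IP, followed by a forward lookup to confirm the hostname maps back to the same IP. Google documents a check of this kind for Googlebot. The sketch below is a minimal illustration, not a complete implementation: the function name `is_verified_crawler` and the suffix list are assumptions for illustration, and the suffixes should be checked against the operator’s current documentation. It also handles IPv4 only, because `socket.gethostbyname_ex` does not resolve IPv6.

```python
import socket

# Assumed hostname suffixes for Google crawlers; verify against Google's
# current documentation before relying on this list.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_crawler(ip, suffixes=GOOGLE_CRAWLER_SUFFIXES,
                        reverse_lookup=socket.gethostbyaddr,
                        forward_lookup=socket.gethostbyname_ex):
    """Return True if `ip` reverse-resolves to an allowed domain and that
    hostname resolves forward to the same `ip` again."""
    try:
        # Step 1: reverse DNS (PTR) lookup on the requesting IP.
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False

    # Step 2: the hostname must belong to one of the expected domains.
    if not hostname.lower().endswith(tuple(suffixes)):
        return False

    try:
        # Step 3: forward lookup must include the original IP, otherwise
        # the PTR record could simply have been faked by the bot's operator.
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    return ip in addresses
```

The lookup functions are passed in as parameters so the check can be exercised without network access. In production the defaults perform real DNS queries, so results would normally be cached.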

Good Bots VS Bad Bots: Your Site Policies

Another important distinction between good bots and bad bots is that most good bots will follow your site’s directives and policies, especially your robots.txt configuration.

When a bot follows your directives, it’s most likely a good bot; when it ignores them, it’s most likely a bad one.
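As a rough illustration of this check, the sketch below uses Python’s standard `urllib.robotparser` to test whether a logged request stayed within what robots.txt allows for that user agent. The robots.txt content, the paths, and the helper name `violates_robots` are hypothetical and chosen only for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may crawl everything except /private/,
# every other bot is asked to stay away entirely.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def violates_robots(user_agent, path):
    """Return True if a request for `path` by `user_agent` goes against
    the robots.txt rules loaded above."""
    return not parser.can_fetch(user_agent, path)


# Example log entries as (user agent, requested path) pairs.
requests_seen = [
    ("Googlebot", "/blog/post"),
    ("Googlebot", "/private/data"),
    ("SomeOtherBot", "/blog/post"),
]
violations = [entry for entry in requests_seen if violates_robots(*entry)]
```

A bot that repeatedly shows up in `violations` is ignoring your directives, which is a useful signal even though it is not proof of malicious intent on its own.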

Good Bots VS Bad Bots: Activities

An obvious difference between good bots and bad bots is the tasks they perform:

Tasks of Good Bots

Below are the common types of good bots available on the internet, and their activities:

- Search Engine Bots

Pretty self-explanatory: these bots crawl and index websites on the internet for search engine ranking purposes. Googlebot and Bingbot are two examples of search engine bots. They are also called ‘spider’ bots because they ‘crawl’ a web page’s content, just like a spider crawls.

- Data Bots

Data bots refer to bots that provide real-time information like weather, currency rates, share prices, and so on. Siri and Google Assistant are actually examples of data bots, but there are also various other bots from many different companies with their own specific tasks to share and update information.

- Copyright Bots

This type of bot also crawls websites to scan content, similar to search engine/spider bots. However, it specifically searches for copyrighted web content that has been illegally published or plagiarized. A popular example is YouTube’s Content ID bot, which scans YouTube videos for copyrighted songs, videos, and images. Copyright bots are very useful for any business publishing copyrighted content.

- Chatbots

Chatbots are implemented on many websites and apps to hold conversations with human users in place of human customer service agents. They allow businesses to provide more cost-effective customer service 24/7 while reducing human resources costs.

Tasks of Bad Bots

- Account Takeover (ATO) Bots

Bots can be programmed to perform credential cracking (brute force) and credential stuffing attacks in an attempt to gain unauthorized access to accounts and systems. To identify and block ATO attempts and monitor your website for suspicious activity, you need an account takeover protection solution such as DataDome that protects your website and server automatically.

- Content Scraping Bots

Bots can scrape content from a web page and use it for various malicious purposes, for example, leaking the information to competitors or the public, or republishing the content on another site to ‘steal’ the website’s SEO ranking.

- Click Fraud Bots

This type of bot fraudulently clicks on ads to skew the ad’s performance and cost.

- Spam Bots

These bots distribute spam content such as links to fraudulent websites and unsolicited emails, for example in your site’s blog comment section and in social media comment sections.

- Spy Bots

These bots collect data about individuals or businesses for various malicious purposes (e.g. selling it to other businesses, launching credential cracking attacks, etc.).

How To Prevent Bad Bots

While we can use various approaches to detect and manage bad bots, three are the most important:

1. Monitor Your Traffic

The most basic yet important approach in preventing bad bot activities is to monitor your traffic using your go-to analytics tools. You can, for example, use the free and handy Google Analytics to check for the following symptoms of bad bot activities:

- Spike in pageviews: a sudden and unexplained increase in pageviews can be a sign of bot traffic. On its own it isn’t conclusive, so check it against the other signs below.

- Increase in bounce rate: similar to the above, if a lot of users suddenly leave a page immediately without clicking on anything or viewing another page, it can be a symptom of bot activities.

- Dwell time: abnormally high or low dwell time or session duration can also be a major sign of malicious bot activities. Session duration should remain relatively stable.

- Conversion rate: a significant decrease in conversion rate, for example due to an increase in fake account creations, can also point to bot activities.

- Abnormal location: a significant amount of traffic from an unexpected location, for example from countries you don’t serve, might come from bots masking their location via VPN.

When you see any of these signs, you should take the necessary measures to manage the identified malicious bot activities.
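As a minimal sketch of how the first symptom could be flagged automatically, the snippet below compares today’s pageview count against the mean and standard deviation of previous days. The function name `is_spike`, the sample numbers, and the threshold of three standard deviations are assumptions for illustration; real traffic has weekly and seasonal patterns that this simple check ignores.

```python
from statistics import mean, stdev


def is_spike(history, today, threshold=3.0):
    """Return True if `today` is more than `threshold` standard deviations
    above the average of the daily counts in `history`."""
    if len(history) < 2:
        # Not enough data to estimate normal variation.
        return False
    average = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase counts as unusual.
        return today > average
    return (today - average) / spread > threshold


# Made-up daily pageview counts for the previous week.
last_week = [1000, 1050, 980, 1020, 990, 1010, 1005]
```

With these sample numbers, `is_spike(last_week, 5000)` is flagged while `is_spike(last_week, 1030)` is not. A flagged day is only a prompt to look at bounce rate, session duration, and location before concluding that bots are responsible.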

2. Implement Whitelist and Configure Robots.txt

Setting up policies in your site’s robots.txt file is very important in managing both good bots and bad bots.

Essentially, the rules in your robots.txt specify which paths each bot may and may not crawl, along with other directives such as the location of your sitemap. As discussed, good bots will follow these rules, while bad bots won’t.

A whitelist/allowlist is a list of approved bots: any bot that isn’t on the list can’t visit your site. You should maintain a whitelist of allowed bot user agents to ensure that beneficial good bots can access your resources while bad bots are blocked.

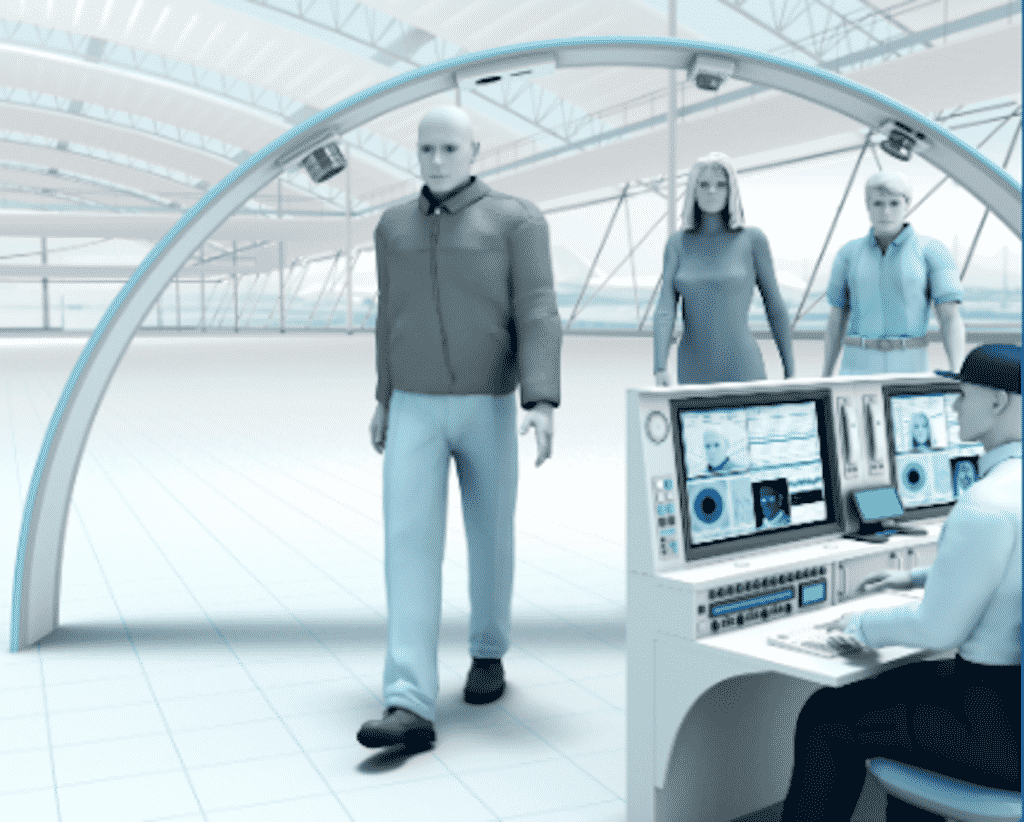
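A user-agent allowlist check can be sketched in a few lines. The tokens in `ALLOWED_BOT_TOKENS`, the generic bot markers, and the function name `classify_user_agent` are hypothetical choices for this example, not a recommended list.

```python
# Hypothetical allowlist of bot tokens, matched case-insensitively.
ALLOWED_BOT_TOKENS = ("googlebot", "bingbot")

# Generic substrings that many self-identifying bots include in their user agent.
GENERIC_BOT_MARKERS = ("bot", "crawler", "spider")


def classify_user_agent(user_agent):
    """Classify a User-Agent header as an allowlisted bot, an unlisted bot,
    or anything else (browsers, or bots that do not identify themselves)."""
    ua = user_agent.lower()
    if any(token in ua for token in ALLOWED_BOT_TOKENS):
        return "allowlisted-bot"
    if any(marker in ua for marker in GENERIC_BOT_MARKERS):
        return "unlisted-bot"
    return "other"
```

Because the User-Agent header is supplied by the client, a match here only shows what the bot claims to be. As noted earlier, malicious bots can impersonate good bots, so an allowlist match should be combined with a check of where the traffic actually comes from.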

3. Install a Good Bot Management Solution

Bot management software is required to effectively distinguish bad bots from good bots, mitigating or blocking the bad bots while allowing good bots to access the website and its resources.

Tools like DataDome can distinguish good bots from bad bots in real time and automatically block bad bots from accessing your resources.

A good bot management solution should:

- Differentiate between bots and legitimate human users

- Identify the source of bot traffic and whether it belongs to a reputable owner

- Filter bot traffic based on IP reputation

- Perform behavior-based analysis to detect malicious bots impersonating human behaviors

- Throttle/rate-limit any bots that perform too many requests on the site

- Challenge/test the bot via CAPTCHA, JavaScript fingerprinting, and other methods when required

- Manage/mitigate malicious bot traffic on a case-by-case basis (i.e. not all bots should be blocked outright)
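To make the throttling/rate-limiting item more concrete, here is a minimal sliding-window limiter keyed by client (for example, an IP address or a verified bot identity). The class name `SlidingWindowLimiter` and the limits used are assumptions for illustration. A commercial bot management product would use far richer signals than a request count, and this in-memory version only works within a single process.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within any `window_seconds` span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # client id -> timestamps of recent requests

    def allow(self, client_id):
        """Record a request and return True, or return False if over the limit."""
        now = self.clock()
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


# Example: at most 100 requests per client per minute.
limiter = SlidingWindowLimiter(max_requests=100, window_seconds=60)
```

When `allow` returns False, the site can respond with HTTP 429 or escalate to a challenge such as a CAPTCHA rather than blocking outright, which fits the case-by-case handling described in the last item of the list.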

End Words

While bad bots are becoming much more sophisticated at launching malicious attacks, we have to remember that there are good bots that are actually beneficial for our business. So, simply blocking all bots isn’t an option since it will hurt your site’s experience and your business performance.

Thus, differentiating between good bots and bad bots and managing them with the right approach, as discussed above, is very important. AI-based bot management systems, such as DataDome, remain the most effective way to filter out bad bots from good bots and protect your network.