You know the feeling. It hits you in the shower, during a long drive, or right as you’re drifting off to sleep. A melody. A perfect hook. A rhythm that captures your mood exactly. It’s vivid and loud in your mind, playing on a loop.

But then reality sets in. You sit down to capture it, and the barriers rise. Maybe you don’t play the piano. Maybe music production software (a digital audio workstation, or DAW) looks like the cockpit of a fighter jet to you. Or perhaps you simply don’t have the thousands of dollars it takes to hire session musicians and a producer.

The Graveyard of Lost Songs

This is where inspiration turns sour. That brilliant idea doesn’t just fade; it becomes a source of frustration. It joins the graveyard of lost songs: potential hits and heartfelt dedications that never made it out of your head. The gap between your taste and your technical skill feels like a canyon that cannot be crossed. You are a creator without a brush, a composer without an orchestra.

The Bridge to Your Sound

But what if that gap didn’t exist? What if you could describe the sound in your head—the mood, the instruments, the vibe—and have a virtual virtuoso play it back to you in seconds?

This is where aisong.ai enters the story. It is not just a tool; it is a translator. It takes the raw, messy, human language of emotion and converts it into the structured, universal language of music.

A Friday Night Experiment

I have to be honest with you—I was skeptical. As a writer, I value the “human touch.” The idea that an algorithm could understand the nuance of a heartbreak ballad or the grit of a cyberpunk synth-wave track seemed far-fetched.

I decided to put aisong.ai to the test on a rainy Friday evening. I didn’t want to create a generic pop song; I wanted something specific. I wanted a lo-fi hip-hop track with jazzy piano chords, rain sounds in the background, and a nostalgic, lonely vibe.

I typed that exact description into the prompt box. I didn’t fiddle with equalizers. I didn’t worry about chord progressions. I just told the story of the sound I wanted.

The “Magic Trick” Moment

In less time than it took to brew my coffee, the progress bar hit 100%. I put on my headphones and pressed play.

I was expecting robotic beeps. What I got was *atmosphere*. The piano sample had a dusty vinyl crackle to it. The snare landed slightly behind the beat, giving the groove that human “swing” that usually takes producers years to master. It wasn’t just audio; it was a mood. It was exactly what I had pictured, pulled directly from my brain and rendered into an MP3.

How It Works: The “Text-to-Audio” Alchemy

To understand why aisong.ai feels different, we have to look under the hood—but let’s skip the technical jargon and use an analogy.

The Digital Composer Metaphor

Imagine you are a film director. You don’t hold the boom mic, and you don’t sew the costumes. You have a vision, and you direct a team of experts to execute it.

Traditional music production requires you to be the director, the cameraman, the actor, and the editor all at once. aisong.ai puts you back in the director’s chair.

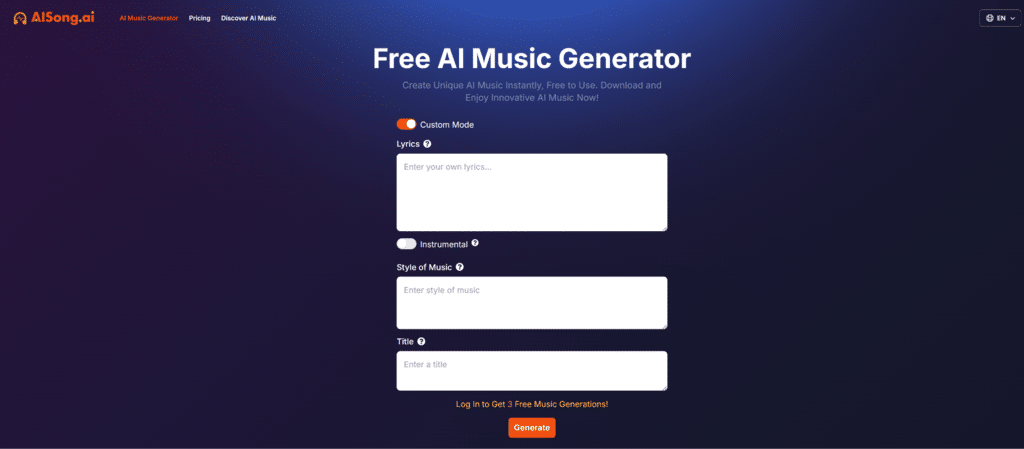

- The Brief (Input): You provide the script. This can be your own lyrics, or just a description of the style (e.g., “Upbeat 80s synth-pop about driving down a neon highway”).

- The Orchestra (Processing): The AI draws on musical patterns learned from a large body of existing music. It understands that “sad” often implies minor keys and slower tempos, while “energetic” might call for a driving bassline and a tempo around 128 BPM.

- The Premiere (Output): The system generates a unique, royalty-free track that has never existed before.

From “Robotic” to “Resonant” (The Before & After)

- Before: Older AI music tools sounded like ringtones from 2005. They lacked structure. A song would start with a guitar and randomly switch to a flute without warning.

- After: The engine behind aisong.ai understands song structure. It knows a song needs an Intro, a Verse, a Chorus, and an Outro. It builds tension and releases it, mimicking the emotional arc of human composition.

Why “Good Enough” is No Longer the Standard

In the world of content creation, music is often an afterthought. We settle for generic stock music because it’s “safe.” But your audience is smart. They can tell the difference between a track that was pasted on and a track that was crafted for the content.

The Visual Breakdown: aisong.ai vs. The Old Ways

Let’s look at the numbers. Why are creators switching from stock libraries to generative AI?

| Feature | Traditional Stock Music | Hiring a Human Composer | AI Song Generator |
|---|---|---|---|
| Cost | $20 – $200 per track | $500 – $5,000+ per track | A fraction of a cent per track, or a monthly subscription |
| Speed | Hours of searching | Weeks of production | Seconds |
| Uniqueness | Low (thousands use the same track) | High (bespoke) | High (unique every generation) |
| Customization | None (you get what you get) | High (but revisions cost extra) | Unlimited (regenerate until perfect) |
| Ownership | Licensing headaches | Complex contracts | Clear usage rights |
| Vibe Match | “Close enough” | Perfect | Precise (based on your prompt) |

Breaking the “Stock Music Fatigue”

We have all heard that one ukulele song in a thousand YouTube tutorials. It’s annoying. It signals “low effort.” By using AI to generate a fresh track, you signal to your audience that you care about the details. You aren’t recycling; you are innovating.

Who is This For? (Spoiler: It’s You)

You might be thinking, “I’m not a musician, so do I really need this?” The answer lies in how we consume content today. Sound is often said to be half of the video experience.

For the Content Creator

You are editing a travel vlog about Tokyo. You need a track that blends the traditional Japanese koto with a modern trap beat. Good luck finding that on a stock site. With aisong.ai, you simply ask for it.

For the Marketer

You are running an ad campaign. You need five variations of background music to A/B test which one drives more clicks. Hiring a composer for five tracks is budget suicide. Generating five tracks with AI takes three minutes.

For the Songwriter

You write lyrics but can’t play an instrument. You can feed your lyrics into the system, choose a genre, and hear your words sung back to you. It’s the ultimate demo-creation tool.

The Future of Sound is Collaborative

There is a fear that AI will replace artists. I see it differently: as an amplifier.

When photography was invented, many painters feared it would kill their art. Instead, it freed them from the need to be strictly realistic, helping pave the way for Impressionism and abstract art. Similarly, aisong.ai frees you from the technical drudgery of mixing and mastering.

Your Imagination is the Only Limit

The tool doesn’t know what “heartbreak” feels like—but you do. The tool doesn’t know the excitement of a new product launch—but you do. You provide the soul; the AI provides the body.

A Call to Create

Don’t let that melody in your head die. Don’t let your video content suffer from generic, overused background noise. We are living in a golden age of creative tools, where the barrier to entry has been smashed to pieces.

The orchestra is warming up. The studio is open 24/7. The only thing missing is your direction.

Ready to conduct your masterpiece?

Step onto the podium. It’s time to make the world listen.