An engineer who builds real-time computer vision systems for military applications and runs LLM infrastructure on OpenShift evaluated hackathon projects designed around intentional instability — and recognized the same failure patterns he spends his career preventing in production ML pipelines.

In November 2021, Zillow reported a $304 million write-down on homes purchased by its AI-powered iBuying algorithm. The model had performed well during training and initial deployment. It continued to perform well by every internal metric the team monitored. The problem was that the housing market shifted underneath the model’s assumptions, a phenomenon ML engineers call concept drift, and by the time the degradation became visible in business outcomes, the damage was already catastrophic.

Dmytro Zaharnytskyi has spent seven years navigating the gap between “the model is running” and “the model is correct.” As a Senior ML/MLOps Engineer at Red Hat, he builds the infrastructure that keeps machine learning systems honest — LLM-as-a-Service architectures on Kubernetes and OpenShift, cloud-native ML platforms on AWS, and the monitoring systems that detect when models begin to drift. Before Red Hat, he built real-time computer vision systems for military applications, where model drift does not produce quarterly write-downs but operational failures with immediate consequences.

System Collapse 2026, organized by Hackathon Raptors, gave Zaharnytskyi a batch of eleven projects built around the very outcome his career exists to prevent: systems where degradation, drift, and failure are not bugs to be patched but features to be embraced. He evaluated each through the lens of an engineer who builds the guardrails around models that want to drift.

The Feedback Loop That Destroys Its Own Predictions

The highest-scoring submission in Zaharnytskyi’s batch was System Calm by team 473 — a meditation application for anxious systems that scored a perfect 5.00 across all three evaluation criteria. The project’s premise is deceptively simple: a system that monitors its own performance and, upon detecting stress, deploys increasingly aggressive “help” interventions. The help degrades performance. The degraded performance triggers more help. The loop accelerates until the system collapses into four simultaneous instances with overlapping voices, culminating in a BSOD-style terminal event.

“The feedback loop is real,” Zaharnytskyi explains. “The app monitors itself, responds to stress by adding more ‘help,’ and that actually degrades performance. The collapse into four instances with overlapping voices and the BSOD-style resolution are memorable and well executed.”

For an ML engineer, this is not an abstract scenario. It is the observability paradox that plagues production machine learning. Monitoring a model’s behavior requires instrumentation. Instrumentation consumes resources. Resource consumption affects model latency. Latency affects prediction quality. In high-throughput inference pipelines — the kind Zaharnytskyi runs on OpenShift — the monitoring layer itself can become the dominant source of performance degradation during load spikes.

The phenomenon has a name in MLOps: the observer effect. Prometheus scraping metrics from a GPU inference server every five seconds introduces negligible overhead at baseline. At 90% GPU utilization, those same scrapes compete with inference workloads for memory bus bandwidth. The monitoring designed to detect degradation contributes to the degradation it is designed to detect. System Calm compresses this production pattern into a visceral, interactive demonstration.
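The loop described above can be sketched as a toy simulation. This is not System Calm's code; the function name and every parameter (baseline latency, stress threshold, cost per intervention) are illustrative assumptions chosen so that one brief load spike is enough to start the spiral.

```python
def simulate_help_loop(steps=10, base_latency_ms=20.0, stress_threshold_ms=25.0,
                       cost_per_intervention_ms=6.0, load_spike_ms=6.0):
    """Return the latency seen at each step of a self-reinforcing 'help' loop.

    All numbers are hypothetical; they only illustrate the shape of the loop.
    """
    interventions = 0
    history = []
    for step in range(steps):
        # A one-off load spike hits at step 0 only.
        spike = load_spike_ms if step == 0 else 0.0
        # Every active intervention adds its own overhead to latency.
        latency = base_latency_ms + spike + interventions * cost_per_intervention_ms
        history.append(latency)
        # The monitor answers stress with more help and never removes any.
        if latency > stress_threshold_ms:
            interventions += 1
    return history
```

With these assumed values the spike disappears after the first step, yet latency keeps climbing because each intervention costs more than the headroom it was meant to protect. Setting `load_spike_ms=0.0` keeps the system at baseline indefinitely.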

“The system design is coherent,” Zaharnytskyi notes. “Anxiety drives both visuals and behavior, and the self-stress mechanism is implemented in detail. The concept is clear and distinctive, and the technical execution is solid.” He also flagged a practical concern: “The network flood during collapse uses external services and can consume bandwidth and hit CORS or rate limits. If you ever soften it for production, you could add a toggle or reduce the number of external requests.” The instinct is telling — even when evaluating art that celebrates system failure, the MLOps engineer in him identifies the production risks.

Cascading Failures Across Distributed Nodes

In distributed ML training, the kind of large-scale model training that happens across GPU clusters, a single slow worker can degrade the entire training run. Synchronous gradient synchronization with a collective operation like AllReduce requires every worker to finish its batch before any can proceed. One node with a thermally throttled GPU does not simply slow itself; it gates the throughput of every other node in the cluster. The failure cascades not through code but through dependency.
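The arithmetic behind the straggler effect is short enough to write down. The sketch below assumes fully synchronous data-parallel training with no overlap between compute and communication; the function names and the sample figures are illustrative.

```python
def sync_step_time(worker_times_s):
    """A synchronous step finishes only when the slowest worker finishes."""
    return max(worker_times_s)


def cluster_throughput(worker_times_s, samples_per_worker):
    """Samples processed per second across the whole cluster."""
    total_samples = len(worker_times_s) * samples_per_worker
    return total_samples / sync_step_time(worker_times_s)


healthy = [1.0] * 8
one_throttled = [1.0] * 7 + [2.0]  # a single GPU running at half speed
```

Under these assumptions, one worker taking twice as long halves the throughput of all eight, because the other seven sit idle at the synchronization barrier.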

Fractured World by Team Baman implements this cascading dependency pattern as a game mechanic. The project builds what its developers call a CascadeEngine — a system of interconnected nodes where failures propagate through defined channels with configurable propagation rates, decay functions, and feedback loops. Failures do not remain local. They ripple through the network according to rules that the system itself evolves.

“Fractured World delivers a strong system-design entry,” Zaharnytskyi observes. “The CascadeEngine — propagation, decay, feedback loops — and the ‘Keg Principle’ show a clear view of emergent behavior. The ‘Emergence on Collapse’ theme is well implemented.”

The “Keg Principle” that Zaharnytskyi references is the project’s term for threshold-triggered cascade events — the moment when accumulated pressure crosses a critical boundary and the system transitions from stressed-but-functional to actively failing. In ML pipelines, this threshold manifests when a feature store’s latency exceeds the prediction timeout, causing the inference service to fall back on default values, which degrades model accuracy, which triggers retraining requests, which load the feature store further.
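A threshold-triggered cascade of this kind can be sketched in a few lines. This is not the project's CascadeEngine; the node names, the `propagation`, `decay`, and `threshold` parameters, and the stress values are all assumptions made for illustration.

```python
def cascade_step(stress, edges, propagation=0.5, decay=0.1, threshold=1.0):
    """Advance one tick of a toy cascade.

    Every node sheds a fraction of its stress (decay). Any node at or above
    the threshold also pushes a share of its stress to its downstream nodes.
    """
    nxt = {node: s * (1.0 - decay) for node, s in stress.items()}
    for node, s in stress.items():
        if s >= threshold:
            for neighbor in edges.get(node, []):
                nxt[neighbor] = nxt.get(neighbor, 0.0) + s * propagation
    return nxt


def failed_nodes(stress, threshold=1.0):
    """Names of nodes currently at or above the failure threshold."""
    return sorted(node for node, s in stress.items() if s >= threshold)


# Hypothetical pipeline mirroring the loop described above.
edges = {
    "feature_store": ["inference"],
    "inference": ["retraining"],
    "retraining": ["feature_store"],
}
stress = {"feature_store": 1.2, "inference": 0.6, "retraining": 0.2}
after_one_tick = cascade_step(stress, edges)
```

Below the threshold, stress simply decays and nothing spreads. Once a single node crosses it, a neighbor that was comfortably within limits can be pushed over on the next tick, which is the stressed-to-failing transition the paragraph describes.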

What makes Fractured World architecturally interesting from an MLOps perspective is that it separates the propagation mechanic from the failure trigger. In production ML systems, this separation is the difference between a well-designed circuit breaker and a poorly designed one. A circuit breaker that trips on local error rates (the trigger) and affects only a single service (the propagation) contains the blast radius. A circuit breaker that trips on downstream timeout rates and propagates by triggering upstream retries amplifies failures rather than containing them. Fractured World lets users observe both patterns and develop intuition for how cascade topology determines cascade severity.
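The containing variant can be sketched as a small class. This is a deliberately minimal illustration, assuming a simple consecutive-failure count as the trigger; it omits the half-open state and reset timer that production breakers normally include, and the class and parameter names are hypothetical.

```python
class CircuitBreaker:
    """Trip on local failures and answer with a fallback instead of retrying."""

    def __init__(self, failure_threshold=3):
        self.failure_threshold = failure_threshold
        self.failures = 0
        self.open = False

    def call(self, fn, fallback):
        # Once open, the failing dependency is no longer called at all,
        # so no extra load is sent toward it.
        if self.open:
            return fallback()
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.open = True
            return fallback()
        # A success resets the consecutive-failure count.
        self.failures = 0
        return result
```

The design choice that matters is in the first branch: an open breaker returns the fallback locally rather than issuing retries, so the failure stays inside one service instead of being pushed upstream.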

“Technical execution is solid,” Zaharnytskyi adds. “The AI integration and surprise score logic are well integrated. Visually and conceptually cohesive, with a clear ‘emergence on the edge of chaos’ narrative.”

Debugging as Adversarial Model Training

Solo Debugger by Abhinav Shukla reimagines the act of debugging as combat — specifically, as the power-fantasy progression system from the anime Solo Leveling. Errors appear as monster cards with countdown timers. Defeating them adds shadow particles to a growing army that follows the user’s cursor using a flocking behavior algorithm. Accumulating sixty shadows triggers the “Monarch State” — a visual transformation representing mastery over the system’s chaos.

“SOLO_DEBUGGER nails the concept and theme,” Zaharnytskyi says. “The ‘defeat errors, build a shadow army’ loop is clear and fun, and the Monarch State moment feels earned. The constellation of particles and the Shadow Strike/Domain Expansion abilities show good follow-through on the Solo Leveling idea.”

The metaphor maps to adversarial training in machine learning — the process where a model learns by competing against an adversary that generates increasingly difficult examples. In generative adversarial networks, the generator improves by studying the discriminator’s rejections. In Solo Debugger, the player improves by studying error patterns and accumulating resolved challenges as persistent allies. Both systems convert opposition into capability.

Zaharnytskyi’s technical review reveals an MLOps engineer’s attention to the gap between documentation and implementation: “Zustand and Motion are used well, and the wave logic and rank progression are solid. The ‘Boids’ description is a bit ahead of the current implementation — the cursor-attraction motion works, but adding real separation, alignment, and cohesion between shadows would push it further toward emergent behavior.”
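For reference, the three rules he names can be sketched in plain Python. This is not the project's implementation (which is a web app); the function name, the radii, and the weights are assumptions, and a real version would also keep the existing cursor attraction and cap the speed.

```python
def boids_step(positions, velocities, view_radius=5.0, sep_radius=1.0,
               sep_w=0.05, align_w=0.05, coh_w=0.01):
    """Advance a 2D flock by one tick; returns (new_positions, new_velocities)."""
    new_velocities = []
    for i, (px, py) in enumerate(positions):
        push_x = push_y = sum_vx = sum_vy = sum_x = sum_y = 0.0
        neighbors = 0
        for j, (qx, qy) in enumerate(positions):
            if i == j:
                continue
            dx, dy = qx - px, qy - py
            dist_sq = dx * dx + dy * dy
            if dist_sq < sep_radius ** 2:
                # Separation: steer away from neighbors that are too close.
                push_x -= dx
                push_y -= dy
            if dist_sq < view_radius ** 2:
                sum_vx += velocities[j][0]
                sum_vy += velocities[j][1]
                sum_x += qx
                sum_y += qy
                neighbors += 1
        vx, vy = velocities[i]
        ax, ay = sep_w * push_x, sep_w * push_y
        if neighbors:
            # Alignment: nudge velocity toward the neighbors' average velocity.
            ax += align_w * (sum_vx / neighbors - vx)
            ay += align_w * (sum_vy / neighbors - vy)
            # Cohesion: nudge toward the neighbors' center of mass.
            ax += coh_w * (sum_x / neighbors - px)
            ay += coh_w * (sum_y / neighbors - py)
        new_velocities.append((vx + ax, vy + ay))
    new_positions = [(p[0] + v[0], p[1] + v[1])
                     for p, v in zip(positions, new_velocities)]
    return new_positions, new_velocities
```

Each particle reads only its neighbors, never a global target, which is what separates flocking from cursor attraction: the group shape emerges from local interactions.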

The gap between described and implemented behavior is where model drift lives in production ML. Model cards describe intended behavior. Monitoring dashboards reveal actual behavior. Solo Debugger’s shadow particles attract toward the cursor but lack the full Boids flocking algorithm described in its documentation — the same pattern as a production model that documents “handles edge cases via ensemble fallback” while the fallback mechanism remains unconnected in the inference graph.

“There’s more room to lean into instability,” Zaharnytskyi observes. “useEntropyStore, DriftingCard, and the visual instability engine could be wired into the Arena so low stability actually warps the UI more.” The recommendation reflects a core MLOps principle: connecting existing telemetry to existing visualization, closing the loop between measurement and presentation.

Adaptive Retraining in Real Time

FRACTURE by The Broken Being scored 4.30 in Zaharnytskyi’s evaluation — an AI-driven particle physics sandbox where destruction triggers evolution. Users draw structures, watch them fracture under physics simulation, and then GPT-4 generates entirely new physics rules based on what broke and how. The system does not reset to its original state after collapse. It evolves.

“FRACTURE is a strong fit for the hackathon,” Zaharnytskyi says. “The AI-generated physics, breaking mechanics, and collapse/reset cycle are well implemented and aligned with the theme. All five instability mechanics are present and clearly used. Adaptive rules and feedback from breaking stats create a dynamic system. Ghost traces and sound design make collapses feel meaningful.”

For an ML engineer, FRACTURE demonstrates the principle behind online learning and adaptive retraining — the practice of updating a model’s parameters in response to new data without taking the model offline. In production systems, this is how recommendation engines adapt to shifting user preferences, fraud detection models respond to new attack patterns, and autonomous systems adjust to changing environmental conditions.

The critical challenge in adaptive retraining is validation. When a model updates itself based on new data, how do you verify that the update improved the model rather than degraded it? FRACTURE faces the same challenge: GPT-4 generates new physics rules after each collapse, but those rules are not validated against a schema before being applied. Zaharnytskyi flags the gap: “Validate rules — call RuleSchema.parse(parsedRules) so Zod enforces the schema and catches bad responses.”
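The quoted fix is a one-line Zod call in the project's own TypeScript. The same idea can be sketched in Python as an analog: parse the model's response, then refuse anything that does not match the expected shape. The field names (`gravity`, `friction`, `bounce`) and their ranges are invented for illustration and are not FRACTURE's actual schema.

```python
import json

# Hypothetical schema: field name -> (minimum, maximum) allowed value.
RULE_SCHEMA = {
    "gravity": (-50.0, 50.0),
    "friction": (0.0, 1.0),
    "bounce": (0.0, 1.0),
}


def parse_rules(raw_text):
    """Parse an LLM response into validated physics rules or raise ValueError."""
    data = json.loads(raw_text)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("rules must be a JSON object")
    rules = {}
    for name, (low, high) in RULE_SCHEMA.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        value = data[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"{name} must be a number")
        if not low <= value <= high:
            raise ValueError(f"{name}={value} outside [{low}, {high}]")
        rules[name] = float(value)
    return rules
```

A caller would keep the previous rules whenever `parse_rules` raises, so a bad response costs one skipped evolution step rather than a corrupted simulation.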

The recommendation translates directly to ML production. Model outputs in Zaharnytskyi’s infrastructure pass through validation layers before being served: type checks, range constraints, distribution comparisons against baseline predictions. A classification model that emits a probability of 1.7 has produced an impossible value, a sign of a failed retraining run or broken pipeline that must be caught before the output is served. FRACTURE’s missing schema validation is the game-engine equivalent of serving unvalidated model predictions to users.
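A range check of this kind is small. The sketch below assumes the model returns a list of class probabilities that should sum to one; the function name and tolerance are illustrative and not taken from any specific serving stack.

```python
import math


def validate_probabilities(probs, tolerance=1e-6):
    """Return probs unchanged if they form a valid distribution, else raise."""
    if not probs:
        raise ValueError("empty prediction")
    for p in probs:
        # NaN fails every comparison, so it is checked explicitly.
        if math.isnan(p) or not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    if not math.isclose(sum(probs), 1.0, abs_tol=tolerance):
        raise ValueError(f"probabilities sum to {sum(probs)}, expected 1.0")
    return probs
```

Note that the second check alone would not catch `[1.7, -0.7]`, which sums to one; the per-value range check is what rejects it.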

Emergence Without Central Control

Emergent Behavior Lab by team emergent takes a different approach from the other submissions. Rather than building a single system that collapses, it constructs three parallel simulations — Mutating Life, Adaptive Sort, and Emergent Language — each demonstrating rule-driven emergence without central coordination. Simple local rules produce complex global behavior. No component understands the system-level pattern it participates in creating.

“Emergent Behavior Lab fits the System Collapse theme well,” Zaharnytskyi explains. “Mutating Life, Adaptive Sort, and Emergent Language all show rule-driven emergence without central control. All three sims have clear feedback loops and emergent behavior. Mutating Life — rule mutation, entropy, events — and Emergent Language — signal learning — are particularly strong.”

The parallel to distributed ML systems is structural. In federated learning, individual devices train local models on local data and share only gradient updates with a central server. No single device sees the global dataset. No single device understands the global model. Yet the aggregate behavior, the global model, emerges from the interaction of local training runs that never directly communicate. Emergent Behavior Lab’s simulations capture this pattern: local agents following simple rules, producing system-level intelligence that none of them individually possesses.
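The server-side aggregation step can be sketched as a sample-weighted average, in the spirit of federated averaging. This is a minimal illustration that assumes each client reports how many samples it trained on along with an update vector; real systems add client sampling, secure aggregation, and more.

```python
def federated_average(client_updates):
    """Combine client updates, weighting each by its local sample count.

    client_updates: list of (num_samples, update_vector) pairs, where every
    update_vector has the same length.
    """
    total_samples = sum(n for n, _ in client_updates)
    dim = len(client_updates[0][1])
    return [
        sum(n * update[k] for n, update in client_updates) / total_samples
        for k in range(dim)
    ]


# Two hypothetical devices; the second trained on three times as much data.
global_update = federated_average([(1, [0.0, 2.0]), (3, [4.0, 2.0])])
```

The server only ever sees the update vectors and counts, never the underlying data, yet the averaged result reflects all of it.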

Zaharnytskyi’s review reveals the technical precision he applies to all evaluations: “In adaptive_sort.py, TimSort, ReverseAndMerge, and 3WayQuickSort are chosen but not implemented; execution falls back to QuickSort. Either implement them or remove them from choose_algorithm. In emergent_language.py, AI analysis blocks the main loop; use threading like in the other sims.”
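One way to make that mismatch impossible is to drive selection from a registry of implemented functions, so a name with no implementation fails loudly instead of falling back silently. The sketch below is hypothetical and is not the contents of `adaptive_sort.py`; the registry, the `BuiltinSort` entry, and this version of `choose_algorithm` are assumptions.

```python
def quick_sort(items):
    """Simple out-of-place quicksort using the first element as the pivot."""
    if len(items) <= 1:
        return list(items)
    pivot, rest = items[0], items[1:]
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    return quick_sort(smaller) + [pivot] + quick_sort(larger)


# Only algorithms that actually exist are registered.
ALGORITHMS = {
    "QuickSort": quick_sort,
    "BuiltinSort": sorted,
}


def choose_algorithm(name):
    """Return the registered implementation or refuse; never substitute another."""
    try:
        return ALGORITHMS[name]
    except KeyError:
        raise NotImplementedError(f"no implementation registered for {name}") from None
```

With this shape, offering a new algorithm to the selector requires adding its implementation to the registry first.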

The threading recommendation connects to a core MLOps principle: inference calls must never block the application’s critical path. In Zaharnytskyi’s OpenShift deployments, model inference runs asynchronously — requests are queued, predictions are computed on separate threads or GPU streams, and results are returned via callbacks. A synchronous inference call in a real-time application creates the same problem Emergent Behavior Lab has: the main loop freezes while waiting for a response from a remote model, and the user experience degrades. The fix is the same in both contexts — decouple the AI computation from the rendering or interaction loop.
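The decoupling can be sketched with the standard library's `threading` and `queue` modules. The `slow_analysis` function is a stand-in for a remote model call and `run_loop` is a hypothetical main loop; neither is taken from `emergent_language.py`.

```python
import queue
import threading
import time


def slow_analysis(snapshot):
    """Stand-in for a remote model call (hypothetical); sleeps to mimic latency."""
    time.sleep(0.05)
    return f"analysis of {snapshot}"


def run_loop(frames=5, analyze=slow_analysis):
    """Run a fixed number of frames while one analysis runs in the background."""
    results = queue.Queue()
    worker = threading.Thread(
        target=lambda: results.put(analyze("frame-0")), daemon=True
    )
    worker.start()

    rendered, analysis = 0, None
    for _ in range(frames):
        rendered += 1        # the loop keeps doing its own work every frame
        time.sleep(0.01)
        try:
            analysis = results.get_nowait()  # pick up a result only if one is ready
        except queue.Empty:
            pass

    # For the demo, wait for a late result once the loop has finished.
    worker.join()
    if analysis is None:
        analysis = results.get_nowait()
    return rendered, analysis
```

The key call is `get_nowait`: the loop polls for a result and moves on when none is ready, so a slow response delays the analysis but never the frames.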

The Monitoring Layer as Attack Surface

Mirror Mind by team MirrorMind scored 4.30 — an interactive ecosystem that learns the user’s drawing style, adopts their language patterns, and tracks a stability metric that governs collapse-and-rebirth cycles. The system watches the user, adapts to the user, and then destabilizes based on the degree of adaptation.

“MirrorMind has a strong concept and a lot of thought put into emergent behavior,” Zaharnytskyi says. “The canvas learning, phrase adoption, and stability-driven collapse are implemented and feel coherent.”

The project presents a model monitoring scenario in miniature. Production ML monitoring systems track model inputs (data drift), model outputs (prediction drift), and model performance (concept drift). Mirror Mind tracks user inputs (drawing patterns), system outputs (phrase adoption), and system state (stability metric). When the monitoring system itself becomes adaptive — when it changes behavior based on what it observes — the boundary between the system and its monitor dissolves.
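Input drift of the kind listed above is often tracked with a simple distribution comparison. The sketch below computes a population stability index (PSI) between a baseline sample and a current sample of one feature; it is a minimal, assumption-laden version (equal-width bins taken from the baseline range, a small floor for empty bins), not a description of any particular monitoring product.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two samples of one numeric feature; 0.0 means identical bins."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range land in the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    base, cur = bin_shares(baseline), bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


baseline_sample = [i / 100 for i in range(100)]        # spread over [0, 1)
shifted_sample = [0.5 + i / 200 for i in range(100)]   # squeezed into [0.5, 1)
```

A common rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the feature and should be treated as a tunable assumption.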

Zaharnytskyi identified a critical gap: “There is a mismatch between the README and the code: the README mentions OpenAI for behavior analysis and autonomous actions, but there is no AI integration. The ‘AI’ actions are random choice. Either add real AI for analysis and autonomous actions, or change the README to describe the current random-based system.”

In MLOps, model documentation must accurately reflect what the system does, not what it was designed to do. A model card that claims “uses transformer-based attention” when the deployed model uses a logistic regression fallback gives engineers a false mental model of the system. Engineers who believe they are running one system while actually running another cannot reason correctly about failure modes or drift patterns.

“The adaptiveRules.ts module contains useful logic — physics, colors, mutations — but is never imported or used,” Zaharnytskyi continues. “Wiring it into the particles and other components would make the cross-component feedback feel more real.” The observation describes a pattern instantly recognizable to any engineer who has audited an ML codebase and found feature engineering functions or ensemble components written, tested, and never connected to the production inference graph.

When Pipeline Failures Teach More Than Pipeline Successes

Across eleven evaluations, Zaharnytskyi applied the same analytical framework he uses in production ML operations: verify that documented behavior matches implemented behavior, check that feedback loops are connected end-to-end, ensure that validation exists at system boundaries, and confirm that asynchronous operations do not block critical paths.

The consistency of his feedback reveals something about the universality of MLOps principles. A feedback loop in a meditation application designed to collapse follows the same structural rules as a feedback loop in a model retraining pipeline. A missing schema validation in an AI-powered physics sandbox creates the same category of risk as a missing validation layer in a production inference service. Documentation that describes behavior the system does not implement undermines trust in both a hackathon submission and a deployed model.

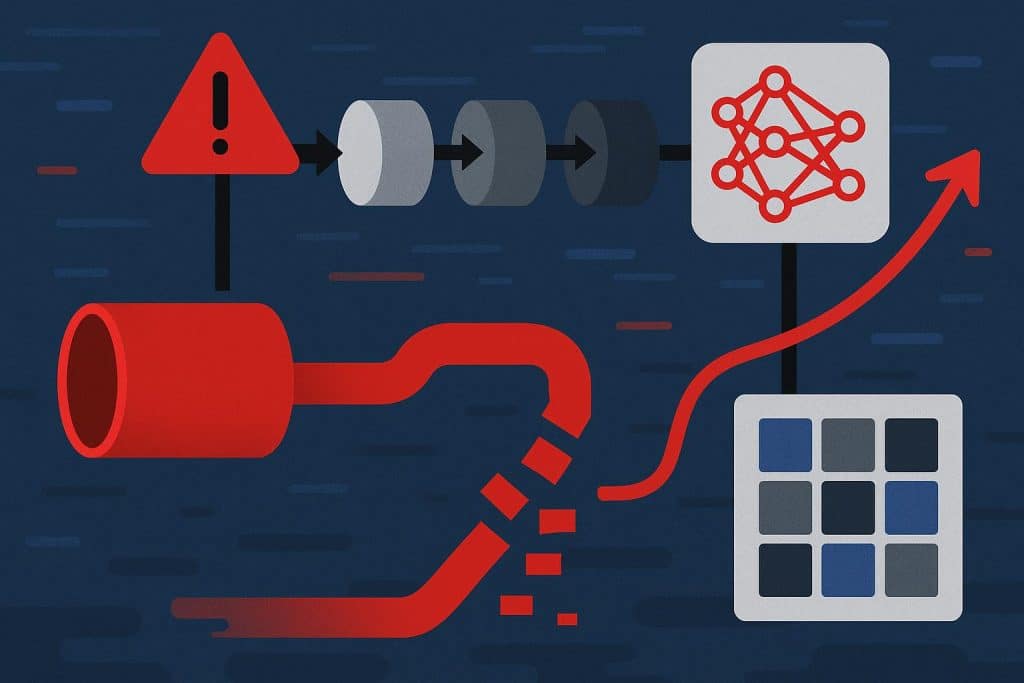

The projects that scored lowest in Zaharnytskyi’s batch shared a common failure mode: disconnected components. Described features that were not implemented. Adaptive systems that used random selection instead of learned adaptation. Monitoring surfaces that displayed metrics without connecting them to the behaviors they were supposed to govern. In ML production, this disconnection is the source of silent model degradation — the monitoring dashboard shows green metrics while the model serves increasingly incorrect predictions, because the metrics being monitored are not the metrics that matter.

What emerges from Zaharnytskyi’s evaluations is a transferable principle: systems that embrace drift require more engineering discipline than systems that resist it. A model that retrains online needs stricter validation than a model deployed once and left static. A game that evolves its own physics rules needs schema enforcement more urgently than a game with fixed mechanics. A system designed to collapse needs more careful monitoring than a system designed to remain stable, because only instrumentation lets the engineer tell intentional instability apart from unintentional failure.

Seven years of building ML infrastructure — from military computer vision where model drift has operational consequences to enterprise LLM platforms on OpenShift where thousands of inference requests per second depend on pipeline reliability — has given Zaharnytskyi a framework for evaluating system integrity that applies regardless of whether the system is trying to succeed or trying to fail. The engineering that keeps a production model honest and the engineering that makes an artistic simulation compelling are, at their core, the same discipline: ensuring that the system does what it claims to do, and that you can tell when it stops.

System Collapse 2026 was organized by Hackathon Raptors, a Community Interest Company supporting innovation in software development. The event featured 26 teams competing across 72 hours, building systems designed to thrive on instability. Dmytro Zaharnytskyi served as a judge evaluating projects for technical execution, system design, and creativity and expression.