AI-powered answers are changing how people search online. Instead of browsing ten blue links, users now expect direct, conversational responses from tools like Google’s AI Overviews, Bing’s answer boxes, or chatbots such as ChatGPT and Gemini.

But this shift has also created a new attack surface for fraudsters. By exploiting AI systems’ dependence on online content, scammers can plant malicious links, impersonate brands, and mislead users inside the very answers they trust the most.

How AI Answers Work

Traditional search lets users scan ranked results and choose where to click. AI-generated answers simplify that process into one synthesized response, often without showing clear sources.

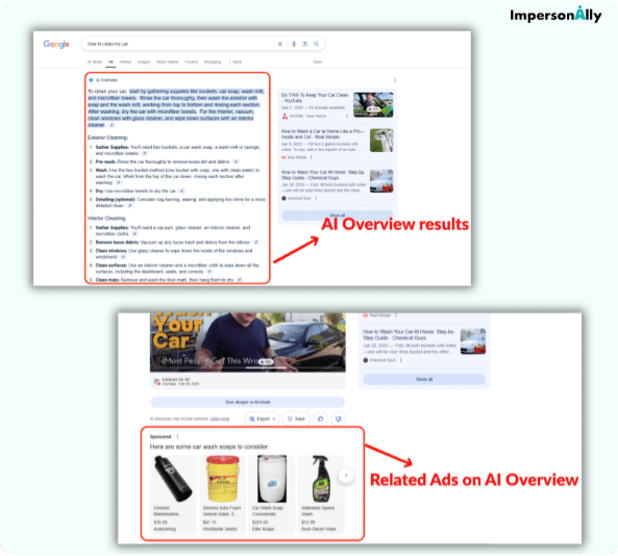

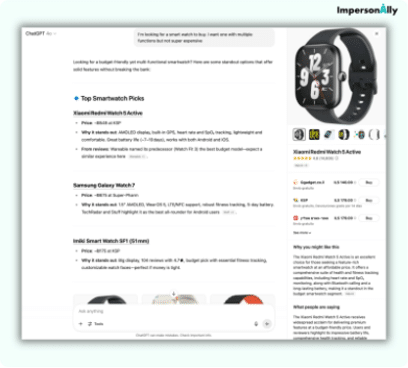

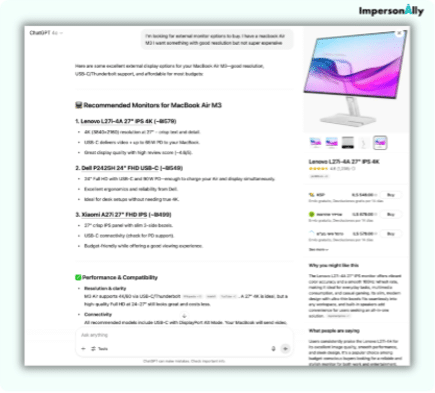

These systems draw on web pages, forums, and their own training data to generate unified answers. Ads are now being blended in as well: a search like “How to clean my car” might return a summarized guide alongside shopping placements. Similarly, a ChatGPT query about “the best smartwatch” may include curated recommendations.

The efficiency is undeniable, but the lack of transparency opens the door for manipulation.

The New Threat: Malicious Links in AI Results

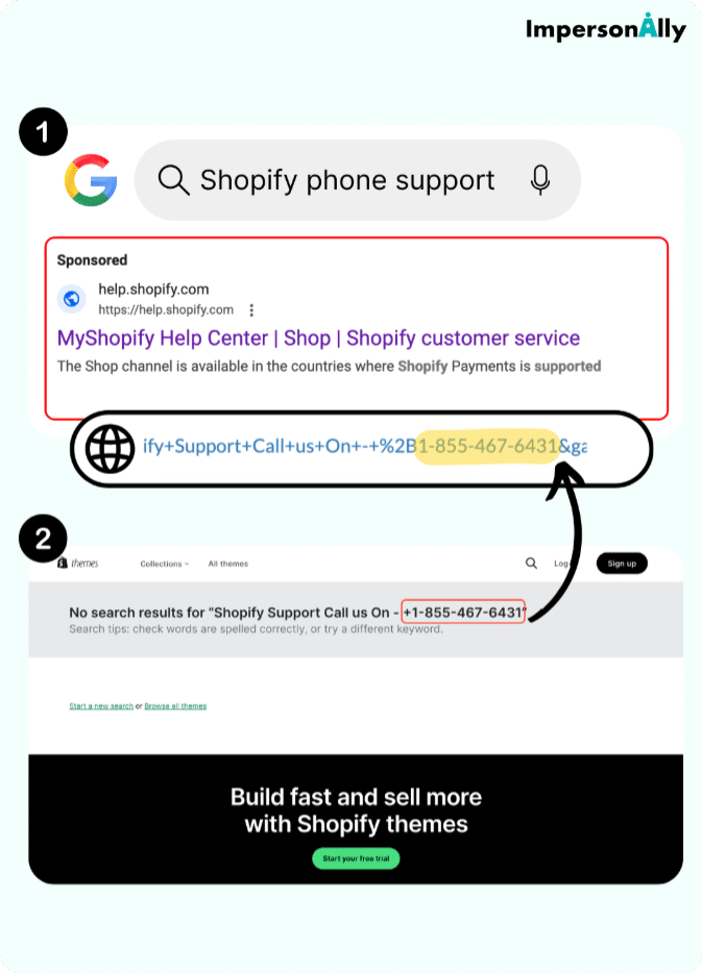

Because AI systems rely on whatever data they ingest, scammers can deliberately plant fraudulent content. Imagine searching “recover my Shopify account” and finding a link in an AI Overview that looks official but leads to a phishing site.

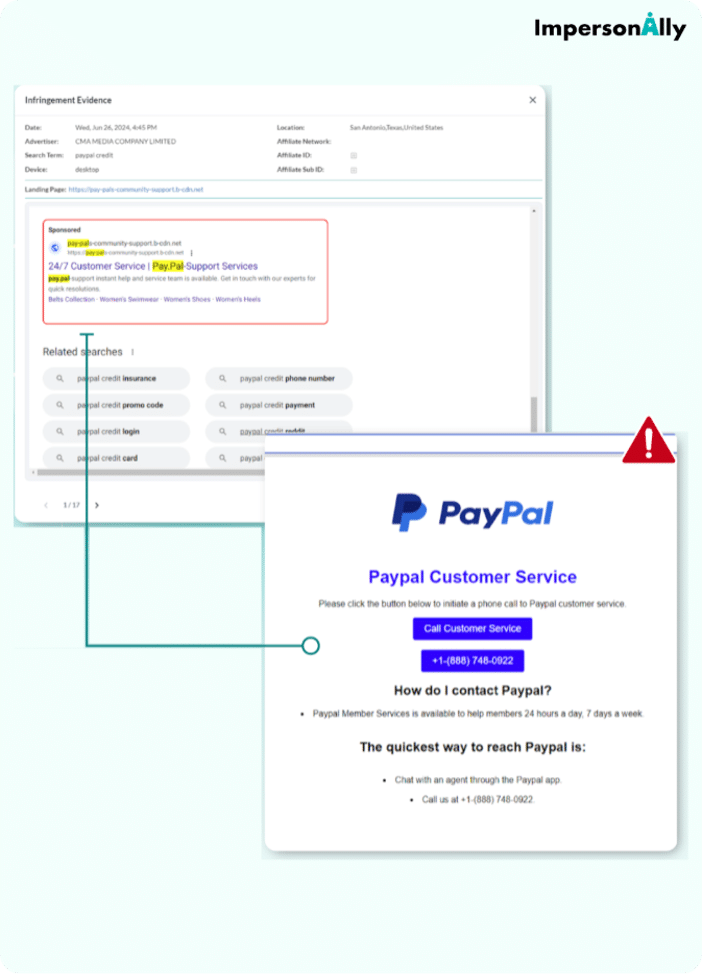

This isn’t hypothetical. Researchers have shown how prompt injection attacks trick chatbots into generating malicious links. Google’s AI Overviews have already been criticized for surfacing unsafe or absurd outputs, while firms like CloudSEK have reported hundreds of cloned PayPal and Shopify domains built to fool both humans and AI systems.

The risk is magnified by trust. When a link appears inside an AI-generated answer, users tend to question it far less than they would an ordinary search result.

How Scammers Exploit AI Answers

Fraudsters are adapting classic black-hat techniques to the AI era.

One is prompt injection, where hidden instructions manipulate chatbots into producing spammy or unsafe URLs. Another is the use of search-optimized lookalike sites: fake domains that mimic trusted brands with copied logos and keyword-rich text. Because they appear relevant, AI systems may summarize them as if they were legitimate.
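To make the lookalike-domain tactic concrete, here is a minimal Python sketch of how a brand team might screen candidate domains. The `OFFICIAL_DOMAINS` set, the digit-for-letter substitutions, and the 0.8 similarity threshold are illustrative assumptions, not values taken from any real detection product.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical list of domains the brand actually owns.
OFFICIAL_DOMAINS = {"shopify.com", "paypal.com"}

# A few digit-for-letter swaps seen in lookalike domains (illustrative, not exhaustive).
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def strip_www(domain: str) -> str:
    """Lowercase the domain and drop a leading 'www.'."""
    domain = domain.lower().strip(".")
    return domain[4:] if domain.startswith("www.") else domain


def lookalike_of(domain: str, threshold: float = 0.8) -> Optional[str]:
    """Return the official domain this one appears to imitate, or None."""
    plain = strip_www(domain)

    # Official domains and their subdomains are never flagged.
    for official in OFFICIAL_DOMAINS:
        if plain == official or plain.endswith("." + official):
            return None

    # Undo common character swaps before comparing.
    candidate = plain.translate(HOMOGLYPHS)
    for official in sorted(OFFICIAL_DOMAINS):
        brand = official.split(".")[0]
        similarity = SequenceMatcher(None, candidate, official).ratio()
        if brand in candidate or similarity >= threshold:
            return official
    return None
```

Under these assumptions, `lookalike_of("sh0pify.com")` and `lookalike_of("shopify-account-recovery.com")` both return `"shopify.com"`, while `lookalike_of("help.shopify.com")` returns `None`. A check this simple will flag some harmless domains that merely mention the brand, so it is better suited to building a review queue than to blocking anything automatically.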

Cloaked URLs are equally effective. Scammers show safe content to AI scrapers while redirecting human users to phishing pages. Forums and user-generated platforms are another weak point. Fake “advice” posts seeded on Reddit or Quora can be scraped and presented as authentic recommendations.
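One rough way to test a suspicious URL for the cloaking described above is to request it twice, once presenting a crawler-style User-Agent and once presenting a browser-style one, and compare what comes back. The sketch below assumes cloaking keyed on the User-Agent header only; the user-agent strings and the 0.6 similarity cutoff are hypothetical, and real cloaking may also depend on IP address, JavaScript, or referrer.

```python
import urllib.request
from difflib import SequenceMatcher

# Hypothetical user-agent strings, used for illustration only.
CRAWLER_UA = "ExampleAIBot/1.0"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def fetch(url: str, user_agent: str, timeout: float = 10) -> str:
    """Fetch a page body while presenting the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def looks_cloaked(crawler_body: str, browser_body: str,
                  min_similarity: float = 0.6) -> bool:
    """Flag a page whose crawler-facing and browser-facing bodies diverge sharply."""
    similarity = SequenceMatcher(None, crawler_body, browser_body).ratio()
    return similarity < min_similarity


def check_url(url: str) -> bool:
    """Fetch the URL under both user agents and compare the two responses."""
    return looks_cloaked(fetch(url, CRAWLER_UA), fetch(url, BROWSER_UA))
```

Dynamic pages legitimately vary between requests, so a low similarity score is a reason to look closer at a URL, not proof of fraud.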

And as Google experiments with ads inside AI Overviews, paid ad abuse is rising. Fraudsters can bid on branded terms, placing fake support ads directly next to AI-generated answers, further blurring the line between authentic and fraudulent results.

The common thread is that AI systems often weigh relevance and semantic match more heavily than authority signals. That makes it possible for even brand-new scam domains to slip into answers if they are well optimized.

Why This Matters for Brands

For enterprises in travel, e-commerce, and retail, the risks are significant. Fake answers don’t just cost clicks; they erode trust and shift blame onto the legitimate brand. Customers who fall for scams rarely fault the AI platform. They hold the business responsible.

The fallout is costly. Brands may face a surge in support tickets from defrauded customers, legal risks if users suffer financial harm, and lost revenue to fraudulent competitors who intercept buyers at the point of intent. Meanwhile, real brand websites risk being buried while scam links are surfaced.

In industries where trust is fragile, the damage can be lasting.

How Brands Can Respond

To stay ahead of these threats, companies need to adapt their protection strategies.

The first step is to monitor how your brand appears in AI systems. This includes AI Overviews, chatbot responses, and paid placements. Specialized tools like Adobe’s LLM Optimizer or ImpersonAlly’s Map Search help enterprises see how their brand terms surface across regions, and whether fraudsters are hijacking them.
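As a simple illustration of what such monitoring can look like, the Python sketch below scans the text of an AI-generated answer for links and flags any that point outside the brand's own domains. How the answer text is collected is out of scope here, and the `OFFICIAL_HOSTS` allowlist and the `example.com` domains are hypothetical placeholders.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of domains the brand controls.
OFFICIAL_HOSTS = {"example.com"}

# Rough pattern for http(s) URLs embedded in free text.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def is_official(host: str) -> bool:
    """True if the host is an allowlisted domain or one of its subdomains."""
    host = host.lower()
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_HOSTS)


def unofficial_links(answer_text: str) -> list:
    """Return every link in the answer whose host is not on the allowlist."""
    flagged = []
    for match in URL_PATTERN.findall(answer_text):
        url = match.rstrip(".,;")  # drop trailing sentence punctuation
        host = urlparse(url).hostname or ""
        if not is_official(host):
            flagged.append(url)
    return flagged


answer = (
    "To recover your account, visit https://help.example.com/reset "
    "or contact support at https://example-support.co/login."
)
print(unofficial_links(answer))  # ['https://example-support.co/login']
```

Run on a schedule against saved answers for the brand's most common support queries, a check like this gives an early signal when an unfamiliar domain starts appearing.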

Next comes Generative Engine Optimization (GEO), the practice of optimizing content for AI parsing. By ensuring that support pages, FAQs, and help hubs are well structured and up to date, businesses improve the odds that AI will surface legitimate information instead of a scam.
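One common way to give a support page machine-readable structure is schema.org `FAQPage` markup embedded as JSON-LD. The Python sketch below generates that markup from a list of question and answer pairs. The helper name `faq_jsonld` and the sample question are hypothetical, and whether a particular AI system actually reads this markup is an assumption rather than a guarantee.

```python
import json


def faq_jsonld(pairs) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(document, indent=2)


markup = faq_jsonld([
    ("How do I reset my password?",
     "Use the reset link on the official login page."),
])
print(markup)
```

The resulting JSON would typically be placed in a `<script type="application/ld+json">` tag on the official help page, so that the authoritative answer is stated in a form that parsers can read without guessing.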

Paid search must also be watched closely. Fraudsters can and do bid on branded terms to sneak fake support ads into AI answers. Protecting those keywords is critical to preventing impersonation. Finally, brands should educate customers on how to identify official support channels, reducing the likelihood of scams succeeding.

Conclusion

AI-generated answers are rewriting the rules of search, but they’re also rewriting the rules of fraud. Scammers are exploiting the trust users place in these systems, planting malicious links and impersonated content at unprecedented speed.

For enterprises, the challenge is no longer just ranking in search. It’s ensuring that when AI systems summarize the web, they present safe and accurate information about your brand. Because when users see a link inside an AI answer, they don’t question the algorithm; they trust it.

And in today’s digital economy, trust is the most valuable asset your brand can protect.

To learn more about how enterprises can detect and stop brand impersonation fraud in real time, visit ImpersonAlly.