As AI models become more sophisticated, distinguishing between human and machine text is becoming one of the hardest challenges in computer science. Users often ask: how does Lynote.ai actually work? How can software tell whether a text was written by GPT-4o or by a human being?

The answer lies in the mathematics of language. While AI can mimic human style, it cannot replicate the chaotic nature of human thought. Lynote.ai uses Natural Language Processing (NLP) to analyze these hidden statistical patterns.

The Core Metrics: Perplexity and Burstiness

To understand Lynote.ai, you must understand the two pillars of AI detection:

1. Perplexity (The Measure of Surprise)

AI models are, at their core, prediction engines. They are trained to predict the next word (more precisely, the next token) in a sentence, and when generating text they tend to pick words the model itself rates as highly probable. As a result, AI writing tends to have low perplexity: it is smooth, logical, and statistically “average.”

Humans, however, are unpredictable. We use slang, unexpected metaphors, and creative phrasing, so our writing tends to have higher perplexity. Perplexity quantifies how “surprised” a language model is by each word in a text, and Lynote.ai measures this surprise to estimate the likelihood of machine generation.
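Perplexity has a standard definition: the exponential of the average negative log-probability that a language model assigns to each token in a text. The sketch below computes it from a list of per-token probabilities. It assumes those probabilities come from some scoring language model (not shown), and the probability values are made up for illustration; it is not Lynote.ai’s actual implementation.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp(-(1/N) * sum(log p_i)).

    token_probs: the probability a (hypothetical) scoring language model
    assigned to each actual token, given the tokens before it.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Illustrative numbers only: the model finds every token likely (predictable text)...
predictable = [0.9, 0.8, 0.85, 0.9]
# ...versus a text where the model is often surprised (less predictable text).
surprising = [0.9, 0.05, 0.3, 0.02]

print(round(perplexity(predictable), 2))
print(round(perplexity(surprising), 2))
```

Lower perplexity means the model found the text more predictable. Scores depend on which language model produces the probabilities, so they are only comparable when measured with the same model.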

2. Burstiness (The Measure of Rhythm)

Humans write in bursts. We might write a long, complex sentence with multiple clauses, followed immediately by a short, punchy sentence. This variation is called Burstiness.

- Human Text: High variation in sentence length and structure.

- AI Text: Monotonous, uniform sentence structures.

Lynote.ai visualizes this rhythm. If a document shows a “flatline” in burstiness, it is a strong indicator of AI generation, even if the grammar is perfect.
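One common way to put a number on burstiness is the coefficient of variation of sentence lengths (standard deviation divided by mean). The Python sketch below uses that definition with a naive punctuation-based sentence splitter. Both choices are illustrative assumptions, not a description of how Lynote.ai computes its score.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Naively split on ., ! or ? and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    0.0 means every sentence has the same length (a "flatline");
    larger values mean more variation in rhythm.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # too few sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat in the tree."
varied = ("I waited for hours in the rain, wondering whether the train would ever "
          "arrive and whether anyone would notice I was gone. Nobody did. Typical.")

print(round(burstiness(uniform), 2))
print(round(burstiness(varied), 2))
```

The uniform example scores zero because all three sentences are six words long. Splitting on punctuation mishandles abbreviations such as “Dr.”, so a real system would use a proper sentence tokenizer.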

The Hardest Challenge: Paraphrased Content

Most free detectors fail when users apply “paraphrasing” or “humanizing” tools. These tools swap in synonyms and reword phrases to raise perplexity and slip past the perplexity check.

Lynote.ai overcomes this with Semantic Continuity Analysis. Instead of just looking at individual words (which can be changed), Lynote.ai analyzes the relationship between ideas. AI models tend to structure arguments in a linear, formulaic way. Even if you change every third word, that robotic logical structure remains. Lynote.ai’s neural networks are trained on millions of examples of “adversarial text” (text designed to fool detectors), allowing it to spot these hidden signatures with 99% accuracy.

Reducing False Positives with Context

A high accuracy rate is useless if the tool flags innocent writers. The share of genuinely human-written texts that a detector wrongly flags as AI-generated is known as the False Positive Rate (FPR).
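FPR is simple arithmetic over a set of texts known to be human-written: FPR = FP / (FP + TN), where FP counts human texts flagged as AI and TN counts human texts correctly passed. A minimal Python sketch, using hypothetical evaluation counts:

```python
def false_positive_rate(false_positives: int, true_negatives: int) -> float:
    """FPR = FP / (FP + TN): the share of genuinely human texts flagged as AI."""
    human_total = false_positives + true_negatives
    if human_total == 0:
        raise ValueError("need at least one human-written sample")
    return false_positives / human_total


# Hypothetical evaluation: 1,000 human-written essays, 20 wrongly flagged as AI.
print(false_positive_rate(false_positives=20, true_negatives=980))  # 0.02
```

Because FPR only looks at human-written texts, a detector can post high overall accuracy on a test set dominated by AI text while still flagging a noticeable share of human writers, which is why FPR is worth reporting on its own.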

Standard detectors often fail because they treat all text the same. Lynote.ai uses a Context-Aware Engine. It understands that a legal contract or a medical report will naturally have low burstiness (because they are formal and structured). It adjusts its sensitivity based on the document type and language context.

This capability is crucial for professional users. Whether you are checking a technical manual or a creative novel, Lynote.ai applies the correct baseline for analysis, ensuring that “formal” is not mistaken for “fake.”
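Lynote.ai does not publish how its Context-Aware Engine sets baselines. As a rough illustration of the general idea only, the Python sketch below judges a burstiness score against a per-genre baseline instead of a single global cutoff. The genre names, baseline values, and the 0.5 ratio are invented placeholders, not measured figures.

```python
# Hypothetical per-genre baselines for typical human burstiness.
# These numbers are placeholders for illustration, NOT real calibration data.
HUMAN_BURSTINESS_BASELINE = {
    "legal": 0.25,
    "medical": 0.30,
    "technical_manual": 0.30,
    "fiction": 0.70,
}
DEFAULT_BASELINE = 0.50  # hypothetical fallback for unknown document types


def looks_flat(burstiness_score: float, doc_type: str, ratio: float = 0.5) -> bool:
    """Flag only if burstiness is well below what human writers in this genre usually show."""
    baseline = HUMAN_BURSTINESS_BASELINE.get(doc_type, DEFAULT_BASELINE)
    return burstiness_score < ratio * baseline


# The same score is unremarkable for a contract but unusually flat for a novel.
print(looks_flat(0.2, "legal"))    # False
print(looks_flat(0.2, "fiction"))  # True
```

The design point is that “low” is relative: a score that would look robotic in fiction is normal for a formal document, so the threshold moves with the genre.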

Conclusion

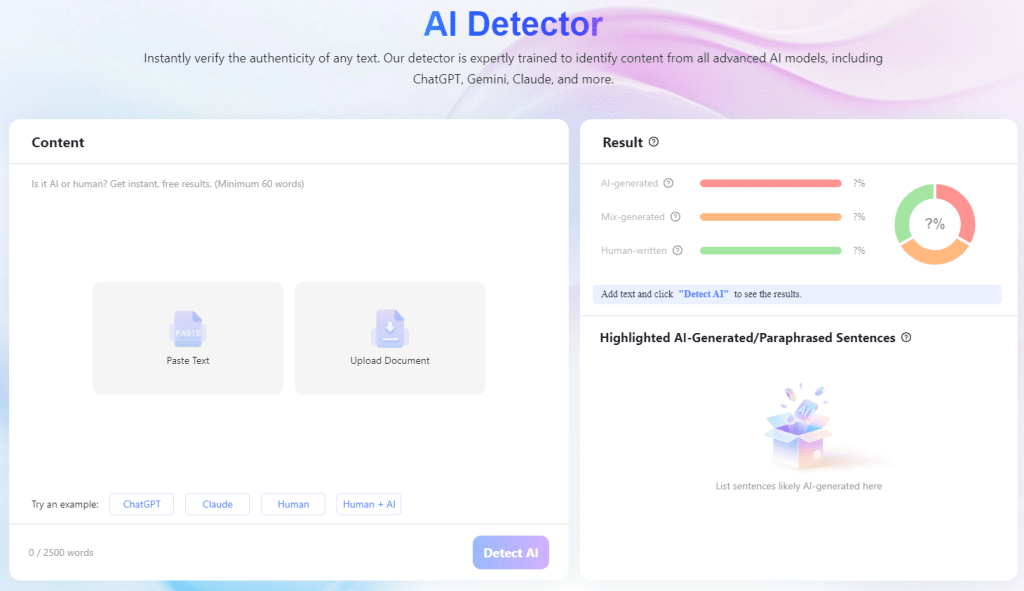

AI detection is not a guessing game; it is a rigorous forensic science. By combining perplexity analysis, burstiness metrics, and deep semantic learning, Lynote.ai provides a transparent and reliable way to verify the authenticity of digital content.