A measurement can be precise without being accurate, or accurate without being precise. A manufacturer needs both accuracy and precision to be confident in the quality of its products. Confused? Curious about the difference between precision and accuracy? Read on.

Here is an old story:

Every day, a man passes a clock shop on his way to work. The shop advertises one particular clock as the most accurate ever made. The man serves in the army, and one of his duties is ceremonial: every day at noon, he must fire a gun into the air from the highest point in the city. To make sure he fires at the same time each day, he sets his watch by this extremely accurate clock as he passes the shop.

After a few years, the man decides to go into the shop and ask about this incredibly accurate clock. What is it made of? How does it work? And finally, how can the owner be so sure that it is accurate?

“A soldier fires a gun from the top of the hill every day at noon, and this clock has never deviated, never lost a second,” the shopkeeper replies. “That is how I know.”

Because both the clock and the firing of the gun produce a repeatable, consistent result, we can say that they are both precise. Every time the man pulls the trigger, the clock strikes twelve. That does not make them accurate, though. The clock and the gun are calibrated only against each other, never against an independent standard, so any error they share goes undetected and the measurement may be biased.

Accuracy vs. Precision Explained

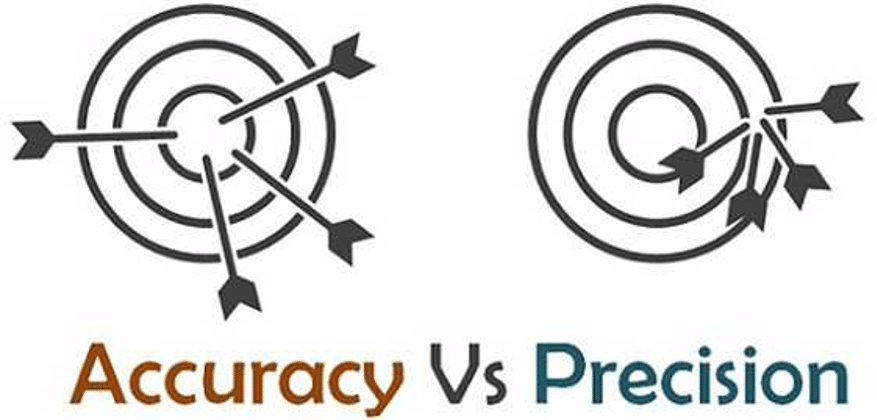

Although we frequently use the phrases “accuracy” and “precision” interchangeably in non-scientific contexts, they are not synonymous. Both are equally crucial for taking a valid measurement. What distinguishes precision from accuracy, then?

- Accuracy is how close a measured value is to the “true value”.

- Precision is how reliably a measurement can be repeated.

Accuracy means getting the right answer. Precision means getting the same answer every time.

If a student adds 1 + 1 and gets 2, they are accurate. To be both accurate and precise, the same student would need to add 1 + 1 several times and get 2 every time. If the student consistently gets 3, they are precise but obviously not accurate.

Let’s go back to our clock analogy. Consider a dishonest manufacturer that advertises a watch as 100% accurate but neglects to mention that it is never precise. One day it tells the right time. The next day it is a few seconds slow. The day after that it is a few seconds fast. Averaged over a month, the watch’s readings are perfectly accurate, but the owner never knows the exact time at any given moment.
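To make the distinction concrete, here is a minimal Python sketch that puts numbers on both ideas. It assumes that accuracy can be summarised as bias (the mean reading minus the true value) and precision as spread (the sample standard deviation). The function name `bias_and_spread` and all the readings are invented for illustration; they are not measurements from a real clock.

```python
from statistics import mean, stdev

def bias_and_spread(readings, true_value):
    """Return (bias, spread) for a set of repeated readings.

    bias   = mean reading minus the true value (small bias means accurate)
    spread = sample standard deviation (small spread means precise)
    """
    bias = mean(readings) - true_value
    spread = stdev(readings)
    return bias, spread

# Hypothetical clock errors in seconds, relative to the true time (true value = 0).
shop_clock = [30.0, 30.1, 29.9, 30.0]            # always about 30 s off
dishonest_watch = [0.0, -4.0, 4.0, -3.0, 3.0]    # scattered around the true time

shop_bias, shop_spread = bias_and_spread(shop_clock, 0.0)
watch_bias, watch_spread = bias_and_spread(dishonest_watch, 0.0)

print(f"shop clock:      bias={shop_bias:.2f} s, spread={shop_spread:.2f} s")
print(f"dishonest watch: bias={watch_bias:.2f} s, spread={watch_spread:.2f} s")
```

With these made-up numbers, the shop clock has a large bias and a tiny spread (precise but not accurate), while the dishonest watch has zero bias and a large spread (accurate on average but not precise).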

Why Accuracy and Precision Are Crucial in Manufacturing

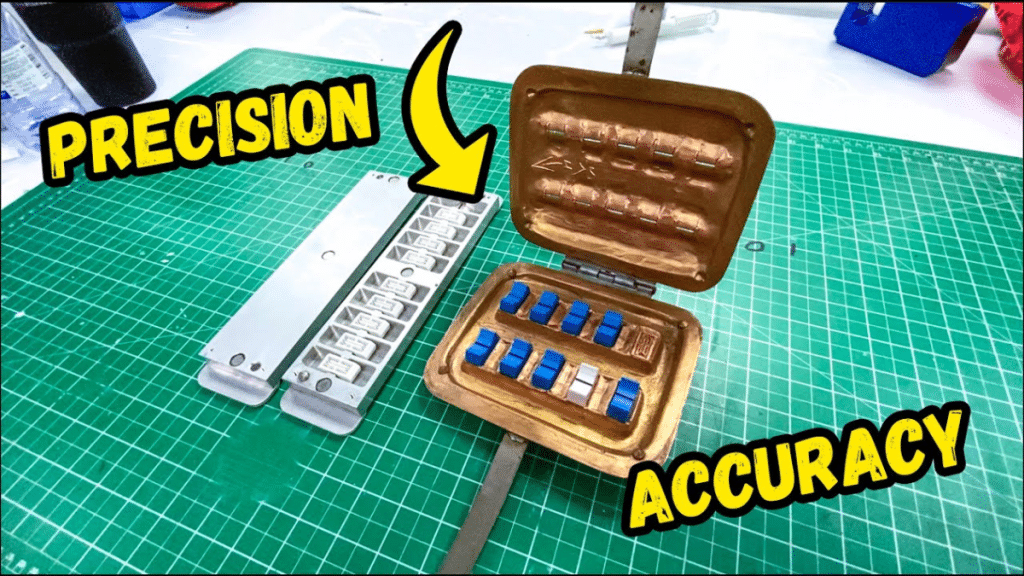

Precision Matters In Manufacturing

Precision matters in manufacturing because it directly affects both operating efficiency and product quality. Even small errors can lead to expensive rework, wasted material, and delivery delays. By using precision machining processes, we ensure that parts and products meet exacting requirements, which keeps output consistent.

Advanced manufacturing methods are built on a foundation of precision engineering. Prioritising precision reduces the variation that could compromise the function or performance of finished parts. As well as cutting waste, this reliability raises customer satisfaction by delivering products that meet or exceed expectations.

A commitment to precision also leads to better process control. Precise measurements and close attention to detail streamline workflows, which speeds up production. Precise production techniques also foster a culture of excellence in our teams, encouraging innovation and continuous improvement.

In production, precision is crucial. It affects customer trust, efficiency, and quality, and ultimately our long-term performance in a highly competitive manufacturing sector.

Importance Of Accuracy

In manufacturing, accuracy is essential because it directly affects the quality of the final product and the efficiency of operations. Even small deviations from the target value can cause serious problems.

Quality Control

Precision machining ensures that parts meet strict specifications. We use rigorous quality control procedures to catch errors early in the production process. This attention to detail improves overall product reliability, reduces defects, and maintains consistency.

Cost Efficiency

Precision engineering minimises waste and makes expensive rework less likely. Investing in precise manufacturing methods allows us to make the best use of resources and shorten production schedules. This efficiency reduces costs, improves delivery times, and makes our operations more competitive in the market.

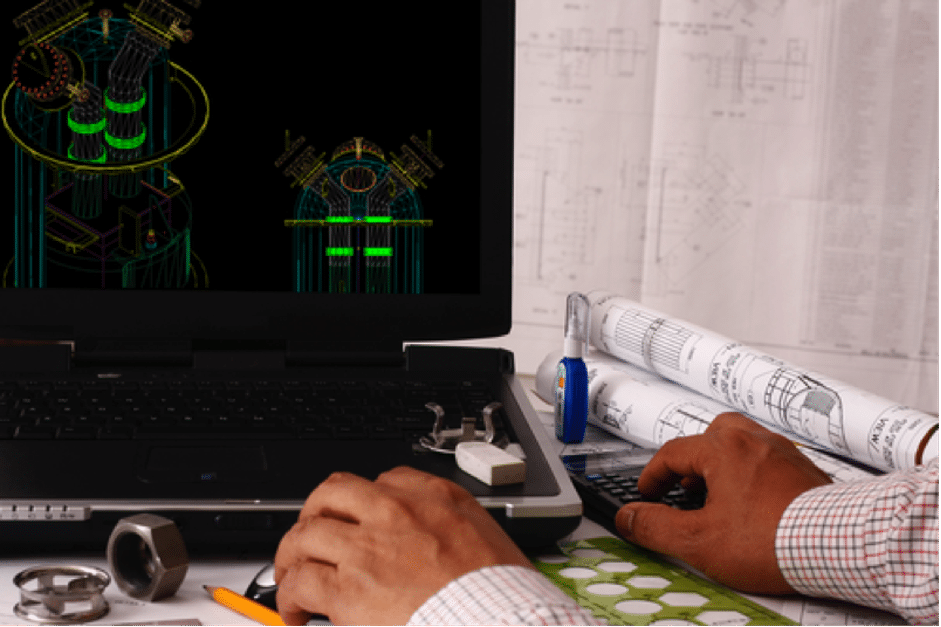

Tools and Techniques for Enhancing Measurement Accuracy

The bad news is that, although it would obviously be preferable to be 100% accurate and precise, this is not achievable in practice. Because of variables we cannot fully control, such as the test equipment, the environment, and the lab staff, there is always some non-zero variability.

That said, there are several ways to optimise precision and accuracy in our work. Drum roll, please!

Ways to Improve Your Accuracy and Precision in the Lab

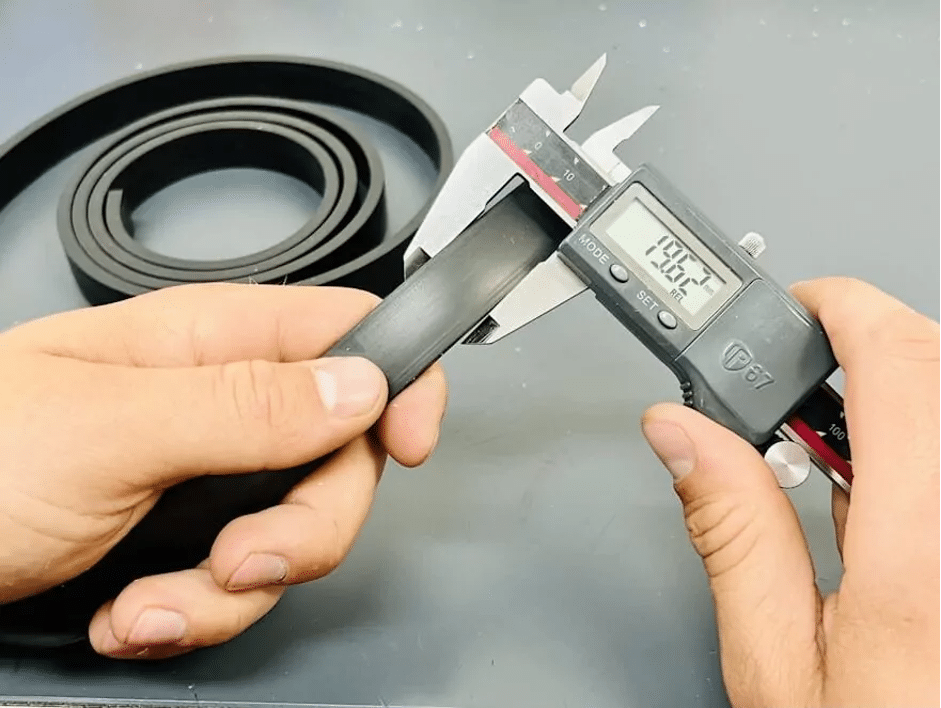

1. Keep EVERYTHING Calibrated!

Calibration is at the top of this list because it is the most important way to make sure your measurements and data are accurate.

Calibration is the process of adjusting or standardising lab equipment to make it more accurate AND precise.

Usually, calibration involves comparing your instrument’s measurement against a standard and adjusting the device or software as necessary.
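As a rough illustration of comparing against a standard and adjusting in software, the sketch below computes a single-point offset correction. This is a simplifying assumption: real calibration procedures often use several reference points and may correct gain as well as offset. The function names and the balance readings are hypothetical.

```python
def calibration_offset(instrument_reading, standard_value):
    """Offset to add to future readings so the instrument agrees with the standard."""
    return standard_value - instrument_reading

def apply_offset(reading, offset):
    """Correct a raw reading using a previously determined offset."""
    return reading + offset

# Hypothetical example: a balance shows 100.3 g for a 100.0 g reference mass.
offset = calibration_offset(100.3, 100.0)   # about -0.3 g
corrected = apply_offset(50.3, offset)      # a later raw reading, corrected

print(f"offset = {offset:.1f} g, corrected reading = {corrected:.1f} g")
```

A single offset only removes a constant bias. It does nothing for random scatter, which is why calibration and repeated measurements complement each other.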

2. Conduct Routine Maintenance

Even if it is fully calibrated, your lab’s equipment probably needs routine maintenance to perform at its highest level of accuracy and precision.

For example, pH meters require regular maintenance that even inexperienced scientists can carry out, while more delicate equipment may need components shipped to the vendor or an on-site service visit.

3. Operate in the Appropriate Range with Correct Parameters

Always use instruments that are calibrated and designed to operate in the range you are dispensing or measuring. For instance, avoid trying to measure OD600 values above an absorbance of 1.0, since most spectrophotometers cannot handle optical density (OD) values this high. If you are ever unsure about using an instrument to measure precisely at an extreme value, seek guidance from a mentor or a trusted peer.

4. Understand Significant Figures (and Record Them Correctly!)

The number of significant figures (also known as “sig figs”) you use and record matters. Sig figs convey the degree of uncertainty associated with a value.

When taking repeated measurements, make sure the number of sig figs is appropriate for each measurement, and keep it consistent.
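If you process readings in software, a small helper can keep the number of sig figs consistent. The sketch below is one possible way to round a value to a given number of significant figures in Python; the name `round_sig` is made up for this example and is not a standard library function.

```python
from math import floor, log10

def round_sig(value, sig_figs):
    """Round a number to the given count of significant figures."""
    if value == 0:
        return 0.0
    # Position of the leading digit, e.g. -2 for 0.012345 and 5 for 123456.
    exponent = floor(log10(abs(value)))
    return round(value, sig_figs - 1 - exponent)

reading_small = round_sig(0.012345, 3)    # keeps three sig figs: 0.0123
reading_large = round_sig(123456.0, 2)    # keeps two sig figs: 120000.0

print(reading_small, reading_large)
```

Note that rounding only controls how a value is reported. The appropriate number of sig figs still depends on the resolution and uncertainty of the instrument that produced it.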

5. Take Multiple Measurements

The more measurements you take of a given property, the more reliable your result will be, because random errors tend to average out. Where sampling is destructive or repeated observations of the same sample are not possible (such as growth rates in a culture), you can compensate by increasing the number of replicates.
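One common way to see the benefit of repeated measurements is the standard error of the mean, which shrinks as the number of measurements grows. The sketch below assumes independent measurements with purely random error; it does not remove a systematic bias, which still needs calibration. The function name and the readings are invented for illustration.

```python
from math import sqrt
from statistics import mean, stdev

def mean_and_standard_error(measurements):
    """Return the mean and the standard error of the mean (needs at least 2 values)."""
    n = len(measurements)
    return mean(measurements), stdev(measurements) / sqrt(n)

# Hypothetical repeated readings of the same quantity.
five_readings = [10.1, 9.9, 10.0, 10.2, 9.8]
ten_readings = five_readings * 2   # same scatter, twice as many readings

mean_5, sem_5 = mean_and_standard_error(five_readings)
mean_10, sem_10 = mean_and_standard_error(ten_readings)

print(f"n=5:  mean={mean_5:.2f}, standard error={sem_5:.3f}")
print(f"n=10: mean={mean_10:.2f}, standard error={sem_10:.3f}")
```

With the same scatter, doubling the number of readings gives a smaller standard error, so the mean is a more trustworthy estimate.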

Accuracy vs. Tolerance: What’s the Difference?

Accuracy

Accuracy is how close the results of the unit under calibration (UUC) are to the standard (STD, or true) value. This closeness is usually expressed as a percentage error (% error), and it can also be expressed in the unit of measurement as an error value. The closer the percentage is to zero (0%), the more accurate the instrument. Accuracy itself is a qualitative description rather than an exact value; its quantitative counterpart is the percentage error. The error value is what is used here.
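The relationship between the error value and the percentage error can be sketched as follows. This assumes the usual convention that error is the UUC reading minus the STD value, and that percentage error is that error relative to the STD value. The function names and the readings are hypothetical.

```python
def error_value(uuc_reading, std_value):
    """Error in the unit of measurement: UUC reading minus the standard (true) value."""
    return uuc_reading - std_value

def percent_error(uuc_reading, std_value):
    """Error relative to the standard value, as a percentage."""
    if std_value == 0:
        raise ValueError("percentage error is undefined for a standard value of zero")
    return error_value(uuc_reading, std_value) / std_value * 100.0

# Hypothetical example: the UUC reads 100.5 when the standard is 100.0.
err = error_value(100.5, 100.0)
pct = percent_error(100.5, 100.0)

print(f"error = {err:.1f}, % error = {pct:.1f}%")
```

A result close to 0% indicates a reading close to the standard value.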

Tolerance

Tolerance is the maximum error or variation that the user’s design permits in the manufactured product or its components. In other words, it is the range of results from a process or product measurement that the user considers acceptable. The permissible error may be:

1. Calculated by the user from the process design

2. Prescribed by regulatory bodies (depending on accuracy class)

3. Taken from manufacturer specifications (based on accuracy)

The formula is Tolerance = upper tolerance limit - lower tolerance limit (UTL - LTL). For a symmetric tolerance, each tolerance limit (UTL or LTL) lies Tolerance/2 from the nominal value. Tolerance limits can be derived from process requirements or manufacturer specifications. Once a measurement is made, comparing it with the tolerance limits shows whether or not it is acceptable.
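A tolerance check along these lines might look like the sketch below. It assumes a symmetric tolerance centred on a nominal value, so each limit sits half the tolerance away from nominal. The function names and the dimensions are illustrative only.

```python
def tolerance_limits(nominal, tolerance):
    """Return (LTL, UTL) for a symmetric tolerance centred on the nominal value."""
    half = tolerance / 2
    return nominal - half, nominal + half

def within_tolerance(measured, nominal, tolerance):
    """True if the measured value lies between LTL and UTL, inclusive."""
    ltl, utl = tolerance_limits(nominal, tolerance)
    return ltl <= measured <= utl

# Hypothetical part: nominal 25.00 mm with a total tolerance of 0.10 mm.
ltl, utl = tolerance_limits(25.00, 0.10)
good_part = within_tolerance(25.03, 25.00, 0.10)
bad_part = within_tolerance(25.08, 25.00, 0.10)

print(f"LTL = {ltl:.2f} mm, UTL = {utl:.2f} mm")
print(f"25.03 mm acceptable? {good_part}; 25.08 mm acceptable? {bad_part}")
```

If the tolerance in a given specification is asymmetric, the two limits would need to be stated separately rather than derived from a single value.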